Crypto trading is no longer the exclusive domain of human intuition and manual chart analysis. Trading bots built on reinforcement learning and LSTM neural networks are fundamentally changing how traders interact with digital markets. These AI-driven systems do more than automate trades: they learn, adapt, and optimize their strategies in real time, bringing a new level of sophistication to both retail and institutional investors.

Reinforcement Learning: The Engine Behind Self-Learning Trading Agents

Reinforcement learning (RL) is at the heart of today's most advanced crypto trading bots. Unlike static rule-based systems, RL agents learn trading behaviors by interacting with a market environment, receiving rewards for profitable actions and penalties for losing ones. This trial-and-error approach lets them discover nuanced strategies that can outperform traditional algorithms in volatile conditions.

The EarnHFT framework, for example, applies hierarchical RL to high-frequency trading and reports profitability gains over baseline models in its experiments. More generally, RL-based bots can learn to adjust their risk appetite as volatility spikes or liquidity dries up, which is a crucial advantage in the 24/7 world of digital assets.
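To make the reward-and-penalty loop concrete, here is a minimal tabular Q-learning sketch in Python. It is a toy and not how EarnHFT or any production bot works. The state is only the direction of the last price move. The two actions are "stay flat" and "be long for the next step". The reward is the resulting profit or loss. The function name `q_learning` and all hyperparameters are illustrative assumptions.

```python
import random

# Hypothetical toy action set: 0 = stay flat, 1 = hold a long position for the next step
ACTIONS = (0, 1)


def q_learning(prices, episodes=200, alpha=0.1, gamma=0.95, epsilon=0.1, seed=0):
    """Learn a Q-table over a toy price series with epsilon-greedy exploration."""
    rng = random.Random(seed)
    q = {}  # maps (state, action) -> estimated discounted return

    def state_at(t):
        # State = sign of the last price move: +1 up, -1 down, 0 unchanged
        move = prices[t] - prices[t - 1]
        return (move > 0) - (move < 0)

    for _ in range(episodes):
        for t in range(1, len(prices) - 1):
            s = state_at(t)
            # Explore with probability epsilon, otherwise act greedily on current estimates
            if rng.random() < epsilon:
                a = rng.choice(ACTIONS)
            else:
                a = max(ACTIONS, key=lambda act: q.get((s, act), 0.0))
            # Reward: profit if long before an up-move, penalty if long before a down-move
            reward = a * (prices[t + 1] - prices[t])
            # For simplicity the series is treated as a continuing task (no terminal state)
            s_next = state_at(t + 1)
            best_next = max(q.get((s_next, act), 0.0) for act in ACTIONS)
            old = q.get((s, a), 0.0)
            # Q-learning update toward reward + discounted best next-state value
            q[(s, a)] = old + alpha * (reward + gamma * best_next - old)
    return q
```

On a steadily rising series such as `list(range(1, 30))`, the learned value of going long after an up-move ends up above the value of staying flat. Real agents replace the tiny state and Q-table with rich features and a neural function approximator.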

LSTM Neural Networks: Unlocking Deep Pattern Recognition

While RL governs decision-making, LSTM (Long Short-Term Memory) neural networks provide these bots with predictive foresight. LSTMs are a specialized form of recurrent neural networks designed to capture long-range dependencies in sequential data – exactly what is needed for financial time series analysis.
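For reference, here is the standard LSTM cell in its common formulation with a forget gate. At each time step t:

- x_t is the input (for example, the latest price and volume features).
- h_{t-1} is the previous hidden state.
- σ is the logistic sigmoid.
- ⊙ is element-wise multiplication.

The additive update of the cell state c_t is what lets information persist across many steps:

```latex
\begin{aligned}
f_t &= \sigma(W_f x_t + U_f h_{t-1} + b_f) && \text{(forget gate)}\\
i_t &= \sigma(W_i x_t + U_i h_{t-1} + b_i) && \text{(input gate)}\\
o_t &= \sigma(W_o x_t + U_o h_{t-1} + b_o) && \text{(output gate)}\\
\tilde{c}_t &= \tanh(W_c x_t + U_c h_{t-1} + b_c) && \text{(candidate cell state)}\\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t\\
h_t &= o_t \odot \tanh(c_t)
\end{aligned}
```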

Projects like the open-source AI Trading Bot use LSTMs to forecast future prices by recognizing subtle patterns in historical price and volume data. This kind of deep pattern recognition aims to let AI agents anticipate market swings before they become obvious to discretionary traders or simpler algorithms.
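Before an LSTM can learn from historical price and volume data, the series is usually cut into fixed-length input windows, each paired with a future target. The sketch below shows one common way to do this in plain Python. The function name `make_windows` and the `lookback`/`horizon` parameters are illustrative and are not taken from the AI Trading Bot project.

```python
def make_windows(features, target, lookback, horizon=1):
    """Pair each run of `lookback` feature rows with the target `horizon` steps after it.

    features: list of per-step feature rows, e.g. (close, volume) tuples
    target:   list of values to forecast, aligned index-by-index with `features`
    """
    if len(features) != len(target):
        raise ValueError("features and target must have the same length")
    if lookback < 1 or horizon < 1:
        raise ValueError("lookback and horizon must be positive")
    inputs, labels = [], []
    # `end` is the first index after the window, so the window never includes the target step
    for end in range(lookback, len(features) - horizon + 1):
        inputs.append(features[end - lookback:end])
        labels.append(target[end + horizon - 1])
    return inputs, labels
```

For example, with closes `[100, 101, 103, 102, 105]` and a lookback of 3, the first window covers the first three (close, volume) rows and its label is the fourth close, `102`. In practice, features are usually scaled using statistics from the training period only, and prices are often converted to returns. This keeps future information out of the inputs.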

Key Advantages of LSTM Networks in Crypto Trading Bots

-

Capturing Long-Term Market Trends: LSTM networks excel at recognizing temporal dependencies in sequential data, enabling trading bots to identify and leverage long-term patterns in cryptocurrency price movements. This capability is crucial for forecasting future trends based on historical data, as demonstrated by projects like AI Trading Bot.

-

Handling Noisy and Volatile Data: Cryptocurrency markets are highly volatile and prone to noise. LSTM networks are designed to filter out irrelevant fluctuations and focus on meaningful signals, improving the reliability of trading decisions in unpredictable environments. This robustness is highlighted in research such as A survey of deep learning applications in cryptocurrency.

-

Enhancing Predictive Accuracy: By maintaining memory of previous states, LSTM-based bots achieve higher accuracy in predicting short-term and long-term price movements. This leads to improved trade execution and profitability, as seen in the LSTM-Algorithmic-Trading-Bot for Bitcoin.

-

Supporting Adaptive Learning: LSTM networks can be integrated with reinforcement learning algorithms to create bots that continuously adapt to changing market conditions. This synergy allows bots to refine their strategies over time, as exemplified by the Recurrent Reinforcement Learning Crypto Agent project.

-

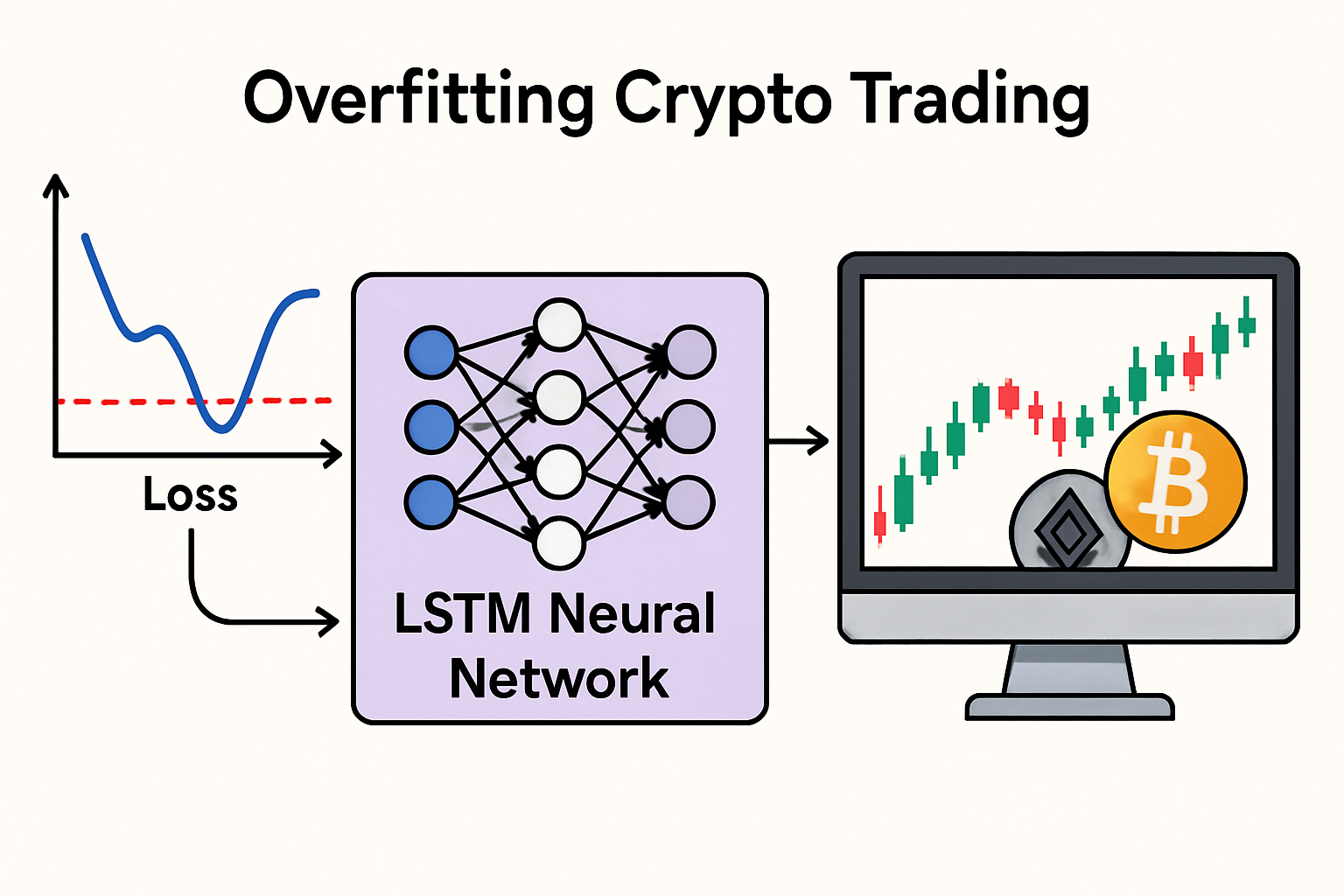

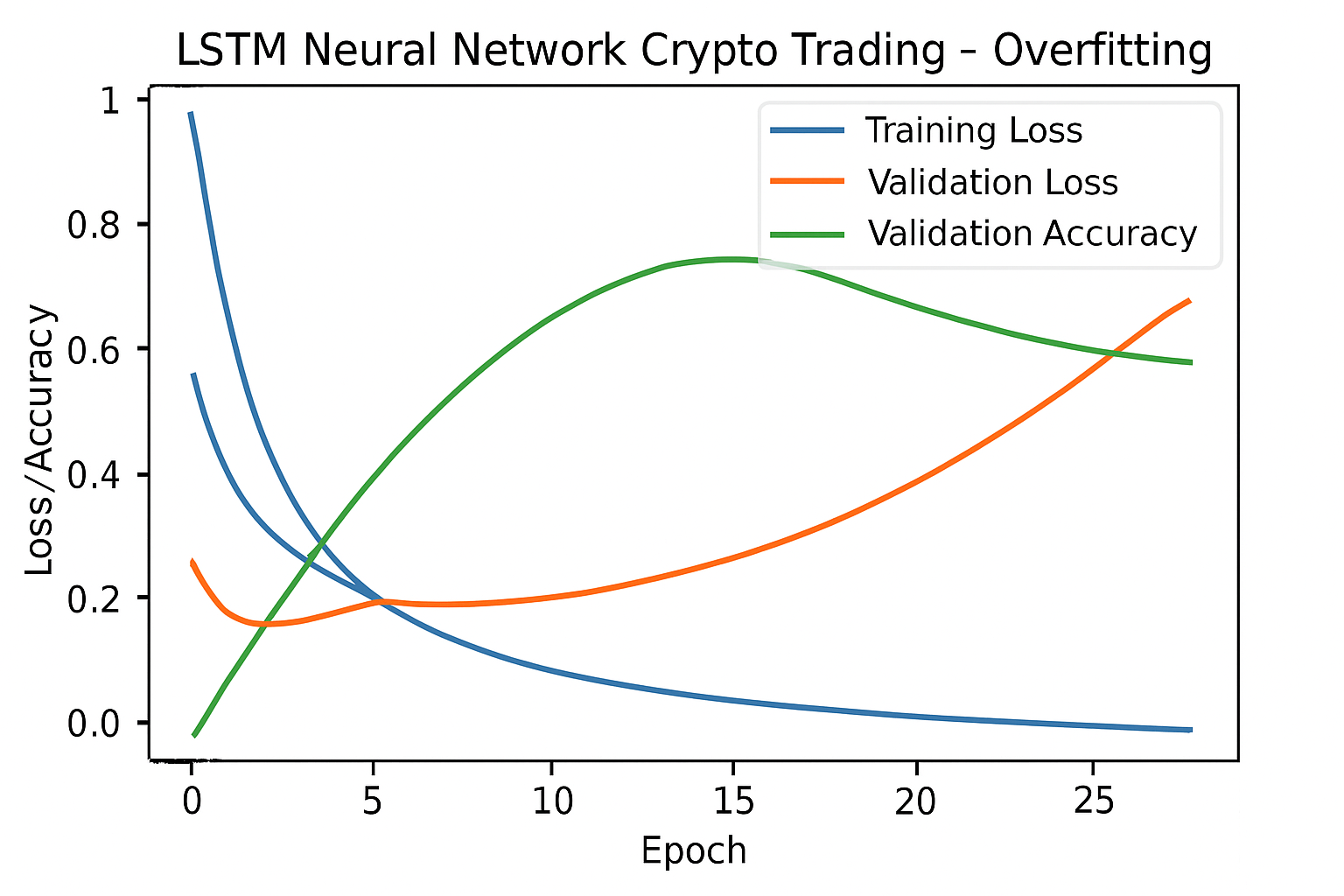

Reducing Overfitting in Financial Time Series: LSTM architectures are less susceptible to overfitting compared to traditional neural networks, especially when dealing with complex, non-stationary crypto market data. This results in more generalizable models capable of performing well on unseen market scenarios, as discussed in A survey of deep learning applications in cryptocurrency.
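In practice, the generalization benefit in the last point depends on regularization. One widely used safeguard is early stopping. It keeps the weights from the epoch with the lowest validation loss, measured on data that comes chronologically after the training slice. Training stops once that loss has not improved for a few epochs. A framework-agnostic sketch follows. The names `early_stopping_epoch`, `patience`, and `min_delta` are illustrative; Keras, for example, offers a comparable `EarlyStopping` callback.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return the index of the epoch whose weights should be kept.

    val_losses: validation loss per epoch, in training order
    patience:   epochs to wait without improvement before stopping
    min_delta:  minimum decrease that counts as an improvement
    """
    best_epoch, best_loss, waited = 0, float("inf"), 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_epoch, best_loss, waited = epoch, loss, 0  # new best: reset the counter
        else:
            waited += 1
            if waited >= patience:
                break  # stop training; later epochs are never evaluated
    return best_epoch
```

With losses `[1.0, 0.8, 0.7, 0.72, 0.75, 0.9, 0.5]` and `patience=3`, training stops before the late dip to 0.5 and keeps epoch 2. A larger patience trades extra compute for the chance to find such late improvements.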

The Synergy: Integrating RL and LSTM for Real-Time Portfolio Optimization AI

The real breakthrough comes when RL and LSTM models are combined into a unified agentic DeFi technology stack. Here’s how it works: the LSTM component continuously analyzes market history to generate predictive signals, while the RL agent uses these signals as part of its state representation to decide when to buy, sell, or hold.
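The hand-off described above can be sketched as follows. The LSTM's forecast becomes one more feature in the RL agent's state, and the agent picks buy, sell, or hold from estimated action values. This is a minimal illustration under stated assumptions:

- The forecast is passed in as a plain number; no model is trained here.
- The Q-function is a hypothetical linear one with hand-set weights.
- All names are made up for the example.

```python
import random

ACTIONS = ("sell", "hold", "buy")


def build_state(recent_returns, lstm_forecast, position):
    """Concatenate market features, the LSTM's predicted next return, and the current position."""
    return tuple(recent_returns) + (lstm_forecast, float(position))


def q_values(state, weights):
    """Hypothetical linear Q-function: one weight vector per action."""
    return [sum(w * s for w, s in zip(weights[action], state)) for action in ACTIONS]


def choose_action(state, weights, epsilon, rng):
    """Epsilon-greedy: explore at random with probability epsilon, otherwise take the best action."""
    if rng.random() < epsilon:
        return rng.choice(ACTIONS)
    values = q_values(state, weights)
    return ACTIONS[values.index(max(values))]
```

Consider weights that simply follow the forecast, e.g. `{"sell": [0, 0, -1, 0], "hold": [0, 0, 0, 0], "buy": [0, 0, 1, 0]}`. With `epsilon=0`, a positive `lstm_forecast` yields `"buy"` and a negative one yields `"sell"`. In a real agent these weights would be learned rather than set by hand.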

The Recurrent Reinforcement Learning Crypto Agent is a prime example of this recurrent-memory-plus-RL pattern. Instead of an LSTM, it uses an echo state network for memory, combined with a recurrent RL policy. It reportedly achieved robust returns across multiple years of live crypto market data, demonstrating adaptability that static models cannot match.
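Because that agent relies on an echo state network (ESN), a short sketch of the idea may help. An ESN keeps a fixed, randomly initialized recurrent "reservoir" whose state serves as memory. Only the readout is trained (or, in an RL setup, the policy that consumes the state). The code below is a generic leaky-integrator ESN in plain Python, not that project's implementation. The weight ranges, scaling, and leak rate are illustrative assumptions.

```python
import math
import random


def make_reservoir(n_inputs, n_units, scale=0.9, seed=0):
    """Create fixed random input and recurrent weights for an echo state network."""
    rng = random.Random(seed)
    w_in = [[rng.uniform(-0.5, 0.5) for _ in range(n_inputs)] for _ in range(n_units)]
    w = [[rng.uniform(-0.5, 0.5) for _ in range(n_units)] for _ in range(n_units)]
    # Rescale so the largest absolute row sum equals `scale`. That norm bounds the spectral
    # radius, so the radius is at most `scale`; keeping it below 1 is the usual heuristic
    # for a stable, fading memory.
    max_row = max(sum(abs(v) for v in row) for row in w)
    w = [[v * scale / max_row for v in row] for row in w]
    return w_in, w


def reservoir_states(inputs, w_in, w, leak=0.3):
    """Run the fixed reservoir over a sequence of input vectors and return every state."""
    x = [0.0] * len(w)
    states = []
    for u in inputs:
        pre = [
            sum(a * b for a, b in zip(w_in[i], u)) + sum(a * b for a, b in zip(w[i], x))
            for i in range(len(w))
        ]
        # Leaky integration: blend the previous state with the new tanh activation
        x = [(1 - leak) * x[i] + leak * math.tanh(pre[i]) for i in range(len(w))]
        states.append(x)
    return states
```

Each returned state summarizes the input history and can be fed to a trained readout or RL policy. The reservoir weights themselves never change.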

“Unlike traditional quant strategies that require frequent manual recalibration, self-learning trading agents built on RL-LSTM architectures autonomously evolve as new information flows into the market.”

What sets these next-gen bots apart is their ability to continuously optimize portfolios in real time, even as market regimes shift. This is critical in an environment where Bitcoin, Ethereum, and altcoins can experience double-digit swings within hours. RL-LSTM agents monitor not only price action but also liquidity, order book depth, and macro signals, adjusting position sizing and stop-loss levels dynamically. This kind of adaptive risk management was difficult to achieve with legacy systems that relied on static parameters or lagging indicators.
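One simple, widely used form of dynamic sizing is volatility targeting. Position size shrinks as realized volatility rises, and the stop-loss distance widens with it. The sketch below rests on these assumptions:

- Volatility is measured from per-period simple returns.
- A hypothetical 2% per-period volatility target sets the size.
- The stop sits `k` volatilities below entry.

An RL-LSTM agent might learn or modulate these parameters rather than fix them.

```python
import statistics


def realized_volatility(prices):
    """Sample standard deviation of simple returns over the price window (needs >= 3 prices)."""
    returns = [b / a - 1.0 for a, b in zip(prices, prices[1:])]
    return statistics.stdev(returns)


def position_size(capital, price, vol, target_vol=0.02, max_fraction=1.0):
    """Units to hold so the position's per-period volatility is roughly target_vol * capital."""
    if vol <= 0:
        return 0.0  # no usable volatility estimate: stay flat
    fraction = min(max_fraction, target_vol / vol)  # cap exposure at max_fraction of capital
    return capital * fraction / price


def stop_loss_price(entry_price, vol, k=2.0):
    """Stop placed k volatilities below a long entry, so it widens when markets get choppier."""
    return entry_price * (1.0 - k * vol)
```

With 10,000 of capital and a price of 100, a 4% per-period volatility gives a position of 50 units. At 1% volatility the cap of full capital (100 units) binds instead.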

Several recent studies report that autonomous trading stacks based on deep learning outperform both traditional technical analysis and simpler machine learning models. In those backtests and real-world deployments, adaptive AI trading strategies delivered higher Sharpe ratios and lower maximum drawdowns than rule-based bots, especially during periods of heightened volatility. Combining RL and LSTM gives bots a sort of “market intuition,” letting them learn from past shocks to better anticipate future ones.
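Those comparisons rest on the Sharpe ratio and maximum drawdown, so it helps to pin down how each is typically computed. The sketch assumes daily returns annualized over 365 periods, since crypto trades every day. The per-period risk-free rate defaults to zero. Both are conventions, and published results may use different ones.

```python
import math
import statistics


def sharpe_ratio(returns, risk_free=0.0, periods_per_year=365):
    """Annualized Sharpe ratio from per-period returns (needs at least two returns)."""
    excess = [r - risk_free for r in returns]
    sd = statistics.stdev(excess)
    if sd == 0:
        return float("nan")  # undefined when returns never vary
    return statistics.mean(excess) / sd * math.sqrt(periods_per_year)


def max_drawdown(equity):
    """Largest peak-to-trough decline of a positive equity curve, as a fraction of the peak."""
    peak = equity[0]
    worst = 0.0
    for value in equity:
        peak = max(peak, value)  # highest equity seen so far
        worst = max(worst, (peak - value) / peak)
    return worst
```

For the equity curve `[100, 120, 90, 130, 104]`, the maximum drawdown is 0.25: the fall from 120 to 90, which is deeper than the later 130-to-104 decline.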

Challenges and Considerations: What Traders Should Know Before Deploying AI Bots

Despite their promise, deploying self-learning trading agents is not without challenges. Training deep RL agents requires massive amounts of historical data and computational resources, especially when optimizing for multiple assets or cross-market correlations. Overfitting is a real risk; models that excel in backtesting can fail spectacularly when exposed to new market conditions if not properly regularized.
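A standard guard against the backtest overfitting described above is walk-forward evaluation. The model trains on one window and is tested on the period immediately after it, then both windows roll forward. This way the model is never scored on data it has already seen or on data that precedes its training set. A minimal index generator is sketched below. The function name and the fixed-size rolling window are illustrative choices; scikit-learn's `TimeSeriesSplit` provides a related, expanding-window splitter.

```python
def walk_forward_splits(n_samples, train_size, test_size, step=None):
    """Yield (train, test) index ranges where every test range directly follows its training range."""
    step = test_size if step is None else step
    if min(train_size, test_size, step) < 1:
        raise ValueError("train_size, test_size and step must be positive")
    start = 0
    while start + train_size + test_size <= n_samples:
        split = start + train_size
        yield range(start, split), range(split, split + test_size)
        start += step  # roll the whole window forward in time
```

With 10 samples, 4 training steps, and 2 test steps, this yields three folds whose test ranges are `[4, 5]`, `[6, 7]`, and `[8, 9]`. Retraining and evaluating on each fold shows whether performance survives across different stretches of market history.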

Transparency is another concern: the decision-making logic inside an LSTM or RL agent can be opaque compared to traditional strategies. This “black box” effect means traders must implement robust monitoring tools to detect drift or anomalous behavior before it impacts capital.
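As one example of such monitoring, the sketch below compares the rolling mean of a model's live prediction errors with the error distribution observed during backtesting. It raises a flag when the gap is statistically large. The class name, the 20-observation default window, and the 3-sigma threshold are illustrative assumptions. The z-score also treats errors as roughly independent, which real trading errors often are not.

```python
import statistics
from collections import deque


class DriftMonitor:
    """Flags when the rolling mean of live errors drifts away from the backtest error baseline."""

    def __init__(self, baseline_errors, window=20, z_threshold=3.0):
        self.mu = statistics.mean(baseline_errors)
        self.sigma = statistics.stdev(baseline_errors)
        self.recent = deque(maxlen=window)  # only the latest `window` live errors are kept
        self.z_threshold = z_threshold

    def update(self, error):
        """Record one live error; return True if drift should be flagged."""
        self.recent.append(error)
        if len(self.recent) < self.recent.maxlen:
            return False  # not enough live observations yet
        live_mean = statistics.mean(self.recent)
        standard_error = self.sigma / len(self.recent) ** 0.5
        if standard_error == 0:
            return live_mean != self.mu  # degenerate baseline with no spread
        # z-score of the rolling mean under the baseline error distribution
        z = (live_mean - self.mu) / standard_error
        return abs(z) > self.z_threshold
```

A flag from `update` is a prompt for human review or retraining, not an automatic trading decision.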

Common Pitfalls of AI-Powered Crypto Trading Bots

- Overfitting to Historical Data: Many bots, especially those using LSTM neural networks, risk overfitting by learning patterns unique to past data that may not recur in future markets. This can lead to poor real-world performance despite strong backtest results.

- Insufficient Real-Time Adaptation: Although RL algorithms are designed to adapt, some bots fail to adjust quickly enough to sudden market shifts, such as flash crashes or unexpected regulatory news, leading to significant losses.

- Ignoring Transaction Costs and Slippage: Bots that do not accurately account for exchange fees, slippage, and liquidity constraints may report inflated profits in simulation but underperform in live trading. This is a common issue highlighted in research on deep reinforcement learning (DRL) trading systems.

- Reliance on Unreliable Data Sources: Low-quality, delayed, or manipulated market data can mislead AI models and lead to poor trading decisions. Ensuring data integrity is critical for both RL-based and LSTM-based bots.

- Lack of Robust Risk Management: Some AI-powered bots, even those using advanced RL strategies, neglect proper risk controls such as stop-loss mechanisms, position sizing, or diversification. This exposes users to outsized losses during volatile periods.

- Limited Generalization Across Market Regimes: Bots trained on specific market conditions (e.g., bull or bear markets) may struggle to generalize when the regime shifts, a challenge noted in studies of deep learning applications in cryptocurrency trading.
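The transaction-cost pitfall is easy to reproduce. The sketch below charges a flat fee plus slippage on every change in position. The 0.1% fee and 5 basis points of slippage are placeholder assumptions, not any exchange's actual schedule, and real slippage grows with order size and thin liquidity.

```python
def backtest_with_costs(positions, asset_returns, fee_rate=0.001, slippage_bps=5.0):
    """Per-period strategy returns net of trading costs.

    positions[t]:     exposure held during period t (1 = long, 0 = flat, -1 = short)
    asset_returns[t]: the asset's simple return over period t
    """
    cost_rate = fee_rate + slippage_bps / 10_000  # cost per unit of notional traded
    previous = 0.0  # assume the strategy starts flat
    net = []
    for position, r in zip(positions, asset_returns):
        turnover = abs(position - previous)  # e.g. flipping long -> short trades 2 units
        net.append(position * r - turnover * cost_rate)
        previous = position
    return net
```

Take positions `[1, 1, 0, -1]` against asset returns `[0.01, 0.02, -0.01, -0.02]`. The gross returns sum to 5.0%, and three units of turnover remove 0.45 percentage points of that. For a bot that trades many times a day, the same arithmetic can turn a profitable simulation into a losing live strategy.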

For best results, forward-thinking traders are combining the strengths of RL-LSTM agents with human oversight, using dashboards for live analytics, alerting mechanisms for outlier trades, and periodic retraining protocols to ensure models stay relevant as the crypto landscape evolves.

The Future: Agentic DeFi Technologies and MT5 AI Stacks

The next evolution will see these technologies integrated directly into agentic DeFi protocols and into AI trading stacks built on multi-asset platforms such as MetaTrader 5 (MT5). Imagine a world where your portfolio is managed by an ensemble of specialized agents, one tuned for high-frequency BTC scalping and another for long-term ETH momentum. All of them would work in concert via smart contracts that enforce risk controls autonomously.

This vision is already taking shape as open-source communities and institutional quants collaborate on frameworks that blend reinforcement learning, LSTMs, and other deep learning innovations. As more data becomes available, from on-chain analytics to social sentiment, these self-learning agents will only get smarter, faster, and more responsive.

“Let data drive your edge.” The age of intuition-driven crypto speculation is giving way to a new paradigm: one powered by relentless machine learning optimization and real-time adaptation. For those ready to embrace it, the rewards could be transformative.