Imagine deploying a trading bot that learns from the chaos of crypto futures markets, adapting in real time to snatch profits from volatility. With Bitcoin hovering at $70,526.00 after a 24-hour gain of $1,915.00, Hyperliquid's perpetual futures scene is ripe for reinforcement learning (RL) autonomous agents. These bots don't just follow rules; they evolve strategies through trial and error, rewarding winning trades while penalizing losses. If you're eyeing a reinforcement learning trading bot for Hyperliquid, you're in the right place to build one that thrives on this DEX powerhouse.

Hyperliquid stands out in DeFi by matching centralized-exchange speed on its own Layer-1 chain. Self-custody via MetaMask means you keep your keys while trading leveraged longs and shorts on crypto pairs and even commodities like gold and silver. High throughput lets it absorb heavy volume, which helps keep slippage in check on liquid pairs. Fees tier down with your 14-day volume, and post-liquidation margin hikes keep things stable. For an autonomous crypto futures bot, this infrastructure is gold: low latency lets RL agents act on fleeting signals.

Why Reinforcement Learning Powers the Smartest Hyperliquid Bots

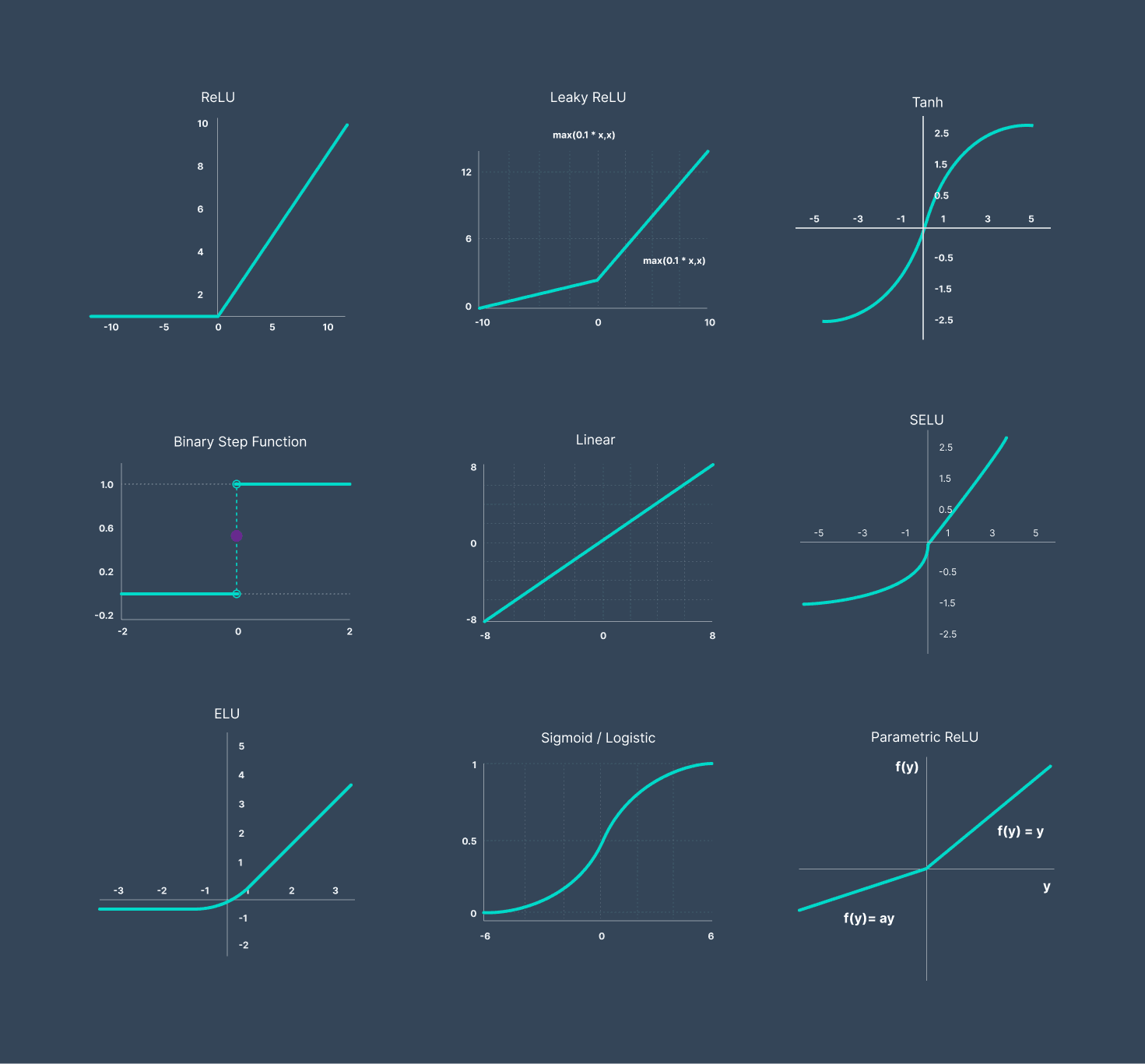

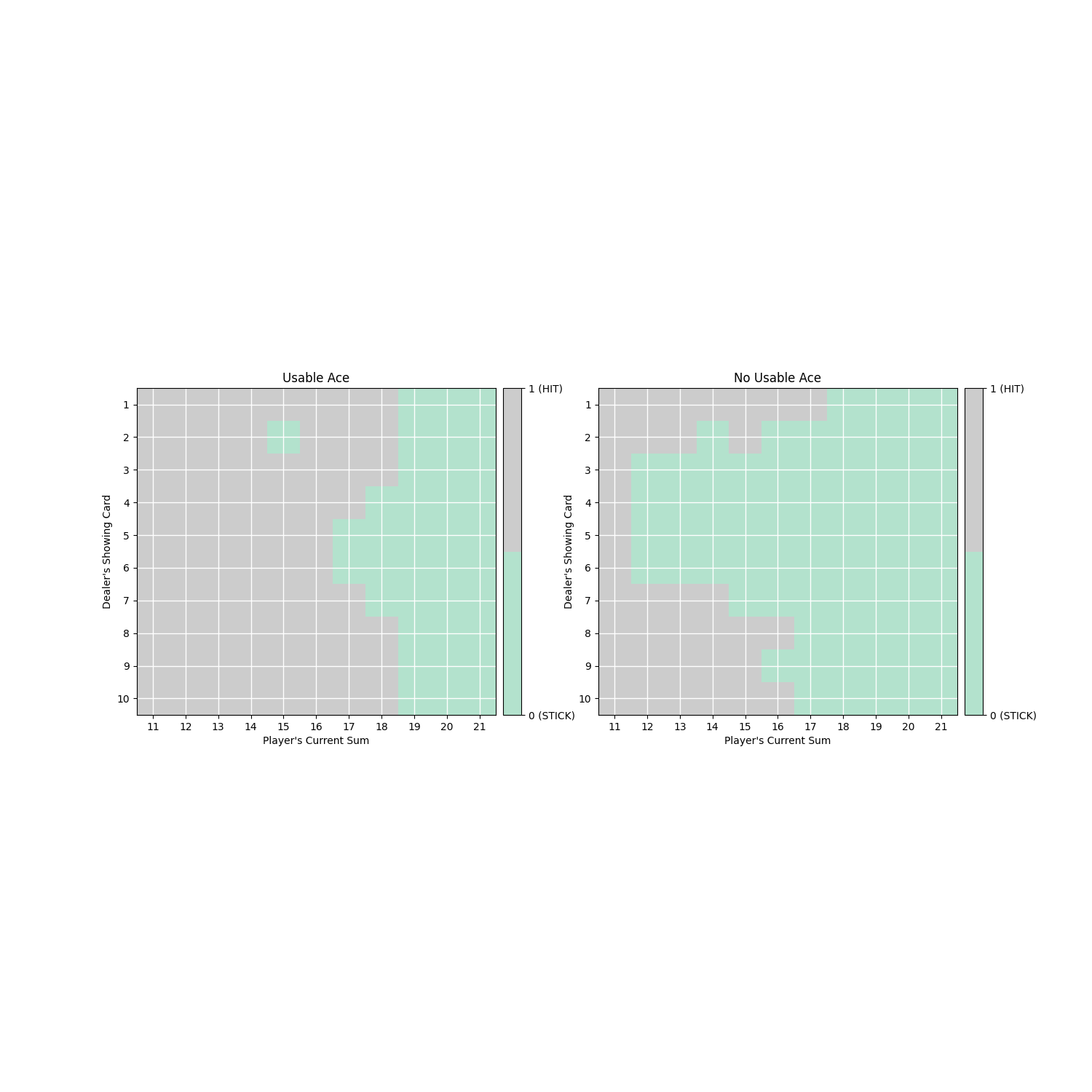

Traditional bots rely on static indicators like RSI or moving averages, but markets shift fast. RL flips the script. Picture an agent in a simulated environment: the state is price action, order book depth, the current BTC price ($70,526.00 at the time of writing), and open positions. Actions? Buy, sell, hold, or adjust leverage. Rewards come from profit minus fees and drawdowns. Over many episodes, the bot can learn nuanced plays, like fading pumps during overbought squeezes.
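To make "profit minus fees and drawdowns" concrete, here is a minimal sketch of a per-step reward. The function name, the flat `fee_rate`, and the `drawdown_weight` coefficient are illustrative assumptions, not Hyperliquid values or any library's API.

```python
def step_reward(equity: float, peak_equity: float, pnl: float,
                traded_notional: float, fee_rate: float = 0.0005,
                drawdown_weight: float = 2.0) -> tuple[float, float, float]:
    """Return (reward, new_equity, new_peak_equity) for one environment step.

    fee_rate and drawdown_weight are hypothetical placeholders; tune them for your setup.
    """
    fees = abs(traded_notional) * fee_rate          # fee charged on traded notional
    new_equity = equity + pnl - fees
    new_peak = max(peak_equity, new_equity)         # running high-water mark
    old_dd = peak_equity - equity                   # dollar drawdown before the step
    new_dd = new_peak - new_equity                  # dollar drawdown after the step
    # Only a deepening drawdown is penalized, so the agent isn't charged twice for the same dip
    penalty = drawdown_weight * max(0.0, new_dd - old_dd)
    return pnl - fees - penalty, new_equity, new_peak
```

Penalizing the increase in drawdown, rather than its level, keeps the signal tied to the action that caused it.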

Open-source gems accelerate this. Freqtrade's Python framework supports major exchanges and Telegram control, making it a strong base for RL backtesting. SimSimButDifferent's HyperLiquidAlgoBot targets perpetuals with automated optimization. Then there's cgaspart's HL-Delta for delta-neutral spot-perp plays. These aren't RL-native, but they can feed your agent's data pipeline. The reinforcement_learning_trading_agent repo on GitHub offers deep RL blueprints, adaptable to Hyperliquid's API for market data pulls and order execution.

Setting Up Your RL Environment for Hyperliquid Futures

Start with a Python stack: Gymnasium (the maintained successor to OpenAI Gym) for environments, and Stable Baselines3 or Ray RLlib for algorithms like PPO or DQN. Fetch Hyperliquid data via their API: spot prices, funding rates, liquidations. Craft your state space, including BTC's 24h high of $70,621.00 and low of $67,642.00 for volatility context. Normalize inputs to tame wild swings.
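As a sketch of the data step, the functions below turn candle records into a float array and compute two simple normalized features: log returns and where the price sits inside its 24h range. The record layout (one dict per candle with string fields `o`, `h`, `l`, `c`, `v`) is our assumption about the shape of Hyperliquid's candle snapshot response; verify it against the API docs before relying on it.

```python
import numpy as np

def candles_to_array(candles: list[dict]) -> np.ndarray:
    """Convert candle dicts with string OHLCV fields into an (n, 5) float array."""
    return np.array([[float(c[k]) for k in ("o", "h", "l", "c", "v")] for c in candles])

def log_returns(closes: np.ndarray) -> np.ndarray:
    """Scale-free returns, comparable whether BTC trades at $67k or $70k."""
    return np.diff(np.log(closes))

def range_position(price: float, low_24h: float, high_24h: float) -> float:
    """0.0 at the 24h low, 1.0 at the 24h high."""
    return (price - low_24h) / (high_24h - low_24h)
```

With the figures above, `range_position(70526.0, 67642.0, 70621.0)` is 2884 / 2979, about 0.968: BTC is trading near the top of its 24h range.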

Training loop basics: replay historical bars and simulate trades with slippage and fees. Hyperliquid's tiered fee structure means high-volume bots pay less, so model the volume tiers. Risk management is non-negotiable: embed max-drawdown penalties in the reward. Tools like Coinrule let non-coders prototype RL-inspired rules, testing entries and exits across exchanges including Hyperliquid.
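Here is one way to model tiered fees and slippage in a backtester. The tier thresholds and rates in `FEE_TIERS` are placeholders, not Hyperliquid's published schedule; substitute the current numbers from their fee page. Slippage is modeled as a fixed basis-point haircut, which is a simplifying assumption; a fuller model would walk the order book.

```python
from bisect import bisect_right

# Hypothetical tiers: (minimum 14-day volume in USD, taker fee rate). Replace with real values.
FEE_TIERS = [(0, 0.00045), (5_000_000, 0.00040), (25_000_000, 0.00035), (100_000_000, 0.00030)]

def taker_fee_rate(volume_14d: float) -> float:
    """Pick the highest tier whose volume threshold the account has reached."""
    thresholds = [t for t, _ in FEE_TIERS]
    idx = bisect_right(thresholds, volume_14d) - 1
    return FEE_TIERS[idx][1]

def simulate_fill(side: str, size: float, mid_price: float,
                  volume_14d: float, slippage_bps: float = 2.0) -> tuple[float, float]:
    """Return (fill_price, fee_paid) for a market order of `size` coins."""
    slip = mid_price * slippage_bps / 10_000
    # Buyers pay above mid, sellers receive below mid
    fill = mid_price + slip if side == "buy" else mid_price - slip
    fee = fill * size * taker_fee_rate(volume_14d)
    return fill, fee
```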

Bitcoin (BTC) Price Prediction 2027-2032

Forecasts in the era of reinforcement learning autonomous trading bots on Hyperliquid and advancing crypto futures markets

| Year | Minimum Price | Average Price | Maximum Price | YoY % Change (Avg) |
|---|---|---|---|---|
| 2027 | $75,000 | $120,000 | $180,000 | +41% |
| 2028 | $110,000 | $200,000 | $350,000 | +67% |
| 2029 | $150,000 | $280,000 | $500,000 | +40% |
| 2030 | $200,000 | $400,000 | $700,000 | +43% |
| 2031 | $280,000 | $550,000 | $950,000 | +38% |
| 2032 | $350,000 | $750,000 | $1,200,000 | +36% |

Price Prediction Summary

Bitcoin is projected to see robust long-term growth from 2027 to 2032, with average prices climbing from $120,000 to $750,000, fueled by the 2028 halving, institutional inflows, regulatory progress, and AI-enhanced trading efficiency on platforms like Hyperliquid. Min/max ranges reflect bearish corrections and bullish surges amid market cycles.

Key Factors Affecting Bitcoin Price

- 2028 Bitcoin halving reducing new supply and historically driving bull markets

- Institutional adoption via ETFs, corporate treasuries, and nation-state reserves

- Regulatory developments fostering clearer frameworks and mainstream integration

- Technological advancements in scaling (Layer-2), DeFi, and RL-based autonomous trading bots on Hyperliquid DEX

- Macro factors positioning BTC as inflation hedge amid global economic shifts

- Persistent BTC dominance despite altcoin competition, with futures trading innovations boosting liquidity

Disclaimer: Cryptocurrency price predictions are speculative and based on current market analysis.

Actual prices may vary significantly due to market volatility, regulatory changes, and other factors.

Always do your own research before making investment decisions.

Hyperliquid's API docs cover order placement: market, limit, and stop-loss orders. Integrate WebSocket streams for live feeds. Your RL trading agent on Hyperliquid observes, acts, and learns. Backtest ruthlessly on 2024's dumps and pumps. Freqtrade shines here, with hyperopt for strategy tuning. YouTube tutorials from Robot Traders walk through Python builds for multi-coin bots, ripe for RL upgrades.
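For the stop-loss piece, a helper like the hypothetical `stop_order_args` below can build the arguments for a reduce-only protective stop. The `order_type` dictionary mirrors the trigger-order shape used in hyperliquid-python-sdk's examples as we understand them; treat the exact keys, the `Exchange.order` argument order, and the `px_decimals` rounding as assumptions to verify against the current SDK and tick-size rules.

```python
def stop_order_args(coin: str, position_size: float, entry_price: float,
                    stop_pct: float = 0.02, px_decimals: int = 0) -> dict:
    """Build args for a reduce-only stop closing `position_size` (signed: + long, - short).

    Hypothetical helper; the trigger dict shape is an assumption based on SDK examples.
    """
    if position_size == 0:
        raise ValueError("no open position to protect")
    is_long = position_size > 0
    # Long stops sit below entry, short stops above it
    trigger = entry_price * (1 - stop_pct) if is_long else entry_price * (1 + stop_pct)
    trigger = round(trigger, px_decimals)
    return {
        "name": coin,
        "is_buy": not is_long,  # closing a long means selling, and vice versa
        "sz": abs(position_size),
        "limit_px": trigger,    # set to the trigger for simplicity; check SDK semantics
        "order_type": {"trigger": {"triggerPx": trigger, "isMarket": True, "tpsl": "sl"}},
        "reduce_only": True,
    }
```

With the SDK wired in, passing these positionally, e.g. `exchange.order(a["name"], a["is_buy"], a["sz"], a["limit_px"], a["order_type"], reduce_only=True)`, avoids depending on the exact parameter names.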

Key RL Strategies Tailored for Hyperliquid Perps

Here are reinforcement learning strategies suited to Hyperliquid. Momentum chasing: the agent learns to ride BTC surges past $70,526.00, exiting on funding-rate spikes. Mean reversion: it bets on reversals after overextended moves relative to the 24h range. Multi-agent setups compete internally, evolving ensemble trades. Delta-neutral bots like HL-Delta hedge spot holdings against perps, with RL optimizing the hedge ratio dynamically.
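For the delta-neutral case, the quantity the RL agent tunes is the hedge ratio. A minimal sketch, assuming the agent outputs a ratio clamped to [0, 1.5] (our choice of range) and that spot and perp are the same coin, so one unit of each carries the same price exposure; `hedge_legs` is a hypothetical helper:

```python
def hedge_legs(spot_size: float, hedge_ratio: float, price: float) -> dict:
    """Short perp size and residual exposure for a long spot position hedged at `hedge_ratio`."""
    hedge_ratio = min(max(hedge_ratio, 0.0), 1.5)   # clamp the agent's action to the allowed range
    perp_short = spot_size * hedge_ratio
    net_delta = spot_size - perp_short               # coins of unhedged exposure (+ long, - short)
    return {"perp_short": perp_short, "net_delta": net_delta,
            "net_delta_usd": net_delta * price}
```

At a ratio of 1.0 the legs cancel, leaving no directional bet; ratios below 1.0 leave some long exposure, which is one way an agent could lean into momentum without abandoning the hedge.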

Funding rate arbitrage shines on Hyperliquid, where RL agents spot divergences between spot and perps, scaling positions to capture premiums without directional bets. I’ve seen these setups turn sleepy markets into steady earners, especially when BTC consolidates around $70,526.00 after dipping to that 24h low of $67,642.00.
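The economics of the trade reduce to a little arithmetic. This sketch estimates the net carry of a long-spot, short-perp position over a holding period, assuming a constant funding rate per interval (real markets won't oblige) and one flat fee per fill on both legs. All rates are illustrative inputs, and the funding interval is whatever your venue uses.

```python
def funding_carry(notional: float, funding_rate: float, intervals: int,
                  fee_rate: float) -> float:
    """Net P&L of a short-perp / long-spot hedge held for `intervals` funding periods.

    funding_rate: per-interval rate; positive means longs pay shorts, so the short leg earns it.
    fee_rate: per-fill fee, charged on entry and exit of both legs (4 fills total).
    """
    funding_income = notional * funding_rate * intervals
    fees = 4 * notional * fee_rate
    return funding_income - fees

def breakeven_intervals(funding_rate: float, fee_rate: float) -> float:
    """Number of funding periods needed for funding income to cover the 4 fills."""
    return 4 * fee_rate / funding_rate
```

With a hypothetical 0.00125% per-interval rate and a 0.045% fee per fill, `breakeven_intervals(0.0000125, 0.00045)` returns 144, so a short-lived divergence doesn't pay. That holding-period trade-off is exactly what an RL agent has to learn.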

Coding the Core: A PPO Agent for Hyperliquid

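Let's get hands-on with a Proximal Policy Optimization (PPO) agent, my go-to for balancing exploration and exploitation in volatile perps. You'll need Gymnasium for the environment, Stable Baselines3 for PPO, and Hyperliquid's Python SDK (hyperliquid-python-sdk) for API hooks. State vector: OHLCV, funding rates, open interest, and your equity curve. Action space: either discrete (buy/sell/hold) or continuous, where the sign sets direction and the magnitude sets position size up to a leverage cap such as 10x. Stable Baselines3's PPO supports either, but not a hybrid of both in one action space, so pick one. The example below uses a single continuous action and starts with just OHLCV, position, and balance in the state.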
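Here's one way to turn that continuous output into an order size while respecting a leverage cap. The function names, the 10x default, and `sz_decimals` (the coin's size precision, which you would read from the exchange's metadata) are illustrative assumptions.

```python
import math

def target_size(action: float, equity: float, price: float,
                max_leverage: float = 10.0, sz_decimals: int = 5) -> float:
    """Map an action in [-1, 1] to a signed position size in coins.

    -1 = max-leverage short, 0 = flat, +1 = max-leverage long.
    Sizes are truncated toward zero so rounding never pushes leverage past the cap.
    """
    action = min(max(action, -1.0), 1.0)
    notional = action * max_leverage * equity
    step = 10 ** sz_decimals
    return math.trunc(notional / price * step) / step

def order_delta(current_size: float, target: float, sz_decimals: int = 5) -> tuple[bool, float]:
    """(is_buy, size) needed to move from the current position to the target."""
    diff = target - current_size
    return diff > 0, round(abs(diff), sz_decimals)
```

For example, an action of 0.5 on $10,000 of equity at $70,526 asks for $50,000 of notional, which `target_size` rounds down to 0.70895 BTC.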

Basic PPO RL Trading Agent with Hyperliquid Integration

Hey there, future trading bot master! 🚀 Now we're getting to the exciting part: building a basic PPO reinforcement learning agent with Stable Baselines3, with hooks for the Hyperliquid API for crypto futures. PPO is fantastic for trading because it balances exploration and exploitation smoothly. We'll define a custom Gymnasium environment where the state captures market dynamics (OHLCV history, position, balance), the action is a position size, and the reward encourages profitable, low-risk trades. The Hyperliquid SDK calls are left as commented placeholders (pip install hyperliquid-python-sdk), and the demo trains on mock prices, so you'll need to plug in real candles, your keys, and order calls before it goes anywhere near a live market. Let's code this up and empower your bot to learn autonomously.

```python
import gymnasium as gym
import numpy as np
from gymnasium import spaces
from stable_baselines3 import PPO
from stable_baselines3.common.env_util import make_vec_env

# Placeholders for the Hyperliquid SDK (pip install hyperliquid-python-sdk):
# from eth_account import Account
# from hyperliquid.exchange import Exchange
# from hyperliquid.info import Info
# from hyperliquid.utils import constants


class HyperliquidTradingEnv(gym.Env):
    """
    Custom Gymnasium environment for Hyperliquid crypto futures trading.

    State: normalized OHLCV data (20 timesteps x 5 features) + position + balance.
    Action: continuous position size (-1 full short, 0 neutral, +1 full long).
    Prices come from a mock random walk; swap in Hyperliquid candles for real use.
    """

    WINDOW = 20   # candles in each observation
    HISTORY = 50  # candles kept in memory

    def __init__(self, symbol="BTC", wallet_address=None, private_key=None,
                 initial_balance=10_000.0, exposure=0.1, max_steps=1000):
        super().__init__()
        self.symbol = symbol  # Hyperliquid perps are keyed by coin name, e.g. "BTC"
        # self.info = Info(constants.MAINNET_API_URL, skip_ws=True)  # market data
        # self.exchange = Exchange(Account.from_key(private_key), constants.MAINNET_API_URL,
        #                          account_address=wallet_address)  # trading
        self.initial_balance = initial_balance  # starting balance in USDC
        self.exposure = exposure  # fraction of balance traded at |position| == 1
        self.max_steps = max_steps

        # 20 timesteps * 5 OHLCV features + position + relative balance = 102
        self.observation_space = spaces.Box(low=-np.inf, high=np.inf,
                                            shape=(self.WINDOW * 5 + 2,), dtype=np.float32)
        self.action_space = spaces.Box(low=-1.0, high=1.0, shape=(1,), dtype=np.float32)

        self.current_step = 0
        self.position = 0.0
        self.balance = initial_balance
        self.price_history = np.empty((0, 5))  # rows of [open, high, low, close, volume]

    def reset(self, seed=None, options=None):
        super().reset(seed=seed)  # seeds self.np_random
        self.current_step = 0
        self.position = 0.0
        self.balance = self.initial_balance
        # Live version: load the last 50 candles from the Hyperliquid API instead
        candles, close = [], 70_000.0
        for _ in range(self.HISTORY):
            candles.append(self._mock_candle(close))
            close = candles[-1][3]
        self.price_history = np.array(candles)
        return self._get_observation(), {}

    def step(self, action):
        self.current_step += 1
        prev_price = self._current_price()

        # Live version: send an order via self.exchange to reach the target position
        self.position = float(np.clip(action[0], -1.0, 1.0))
        current_price = self._next_price()

        # P&L on a notional of position * exposure * balance
        pnl = self.position * (current_price - prev_price) / prev_price * self.balance * self.exposure
        self.balance += pnl

        # Sharpe-like reward: return on starting balance minus a volatility-scaled exposure penalty
        recent_vol = np.std(np.diff(np.log(self.price_history[-11:, 3])))
        reward = pnl / self.initial_balance - 0.1 * abs(self.position) * recent_vol

        terminated = bool(self.balance < 100.0)           # account effectively blown
        truncated = self.current_step >= self.max_steps   # episode time limit
        info = {"balance": self.balance, "position": self.position}
        return self._get_observation(), float(reward), terminated, truncated, info

    def _get_observation(self):
        window = self.price_history[-self.WINDOW:]
        last_close = window[-1, 3]
        ohlc = window[:, :4] / last_close - 1.0                    # prices relative to latest close
        vol = window[:, 4:] / (window[:, 4].mean() + 1e-9) - 1.0   # volume relative to window mean
        features = np.hstack([ohlc, vol]).flatten()                # 100 values
        state = np.concatenate([features, [self.position, self.balance / self.initial_balance - 1.0]])
        return state.astype(np.float32)

    def _current_price(self):
        return self.price_history[-1, 3]  # latest close

    def _next_price(self):
        # Mock: append one random-walk candle and drop the oldest (live: fetch or stream candles)
        candle = self._mock_candle(self._current_price())
        self.price_history = np.vstack([self.price_history[1:], candle])
        return candle[3]

    def _mock_candle(self, prev_close):
        # Geometric random walk keeps prices positive (uniform noise in [-1, 1] does not)
        close = prev_close * np.exp(self.np_random.normal(0.0, 0.005))
        high = max(prev_close, close) * (1 + abs(self.np_random.normal(0.0, 0.001)))
        low = min(prev_close, close) * (1 - abs(self.np_random.normal(0.0, 0.001)))
        volume = self.np_random.uniform(10.0, 100.0)
        return np.array([prev_close, high, low, close, volume])


# Usage: set up and train the agent
if __name__ == "__main__":
    env = make_vec_env(HyperliquidTradingEnv, n_envs=4, env_kwargs={"symbol": "BTC"})
    # tensorboard_log needs `pip install tensorboard`; progress_bar needs tqdm and rich
    model = PPO("MlpPolicy", env, verbose=1, n_steps=2048, batch_size=64, n_epochs=10,
                learning_rate=3e-4, tensorboard_log="./tb_logs/")
    model.learn(total_timesteps=100_000, progress_bar=True)
    model.save("ppo_hyperliquid_trader")
    print("Training complete. Backtest and paper-trade before going live.")
```

Boom! You've just set up a working PPO training pipeline. Fire up that training loop with `model.learn()`, monitor it with TensorBoard (`tensorboard --logdir ./tb_logs/`), and tweak hyperparameters to sharpen its edge. Pro tip: the demo trains on mock prices, so backtest on real historical data first, then go live with paper trading. You're not just coding; you're unleashing an intelligent trader. What's next? Custom features like TA indicators? You've got this! 💪📈

Train offline first on historical data: replay BTC's recent climb from $67,642.00 to $70,621.00, factoring in real fees and slippage. Once Sharpe ratios climb above 1.5 in backtests, paper trade live. Freqtrade's strategy tester pairs well here, letting you swap RL policies in and out.
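Since the 1.5 Sharpe bar does the gating, it helps to pin down how it's computed. A common convention, sketched below, is the mean over the standard deviation of per-bar returns, scaled by the square root of bars per year. The risk-free rate is taken as zero, and hourly bars (24 × 365 per year, since crypto trades around the clock) are an assumption you should match to your data.

```python
import numpy as np

def annualized_sharpe(returns, periods_per_year: int = 24 * 365) -> float:
    """Sharpe ratio of per-period simple returns, with the risk-free rate assumed zero."""
    returns = np.asarray(returns, dtype=float)
    std = returns.std(ddof=1)
    if std == 0:
        return 0.0
    return float(returns.mean() / std * np.sqrt(periods_per_year))
```

A Sharpe computed over a few days of bars is statistically thin, so treat a 1.5 reading on a short window as a screening signal rather than proof.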

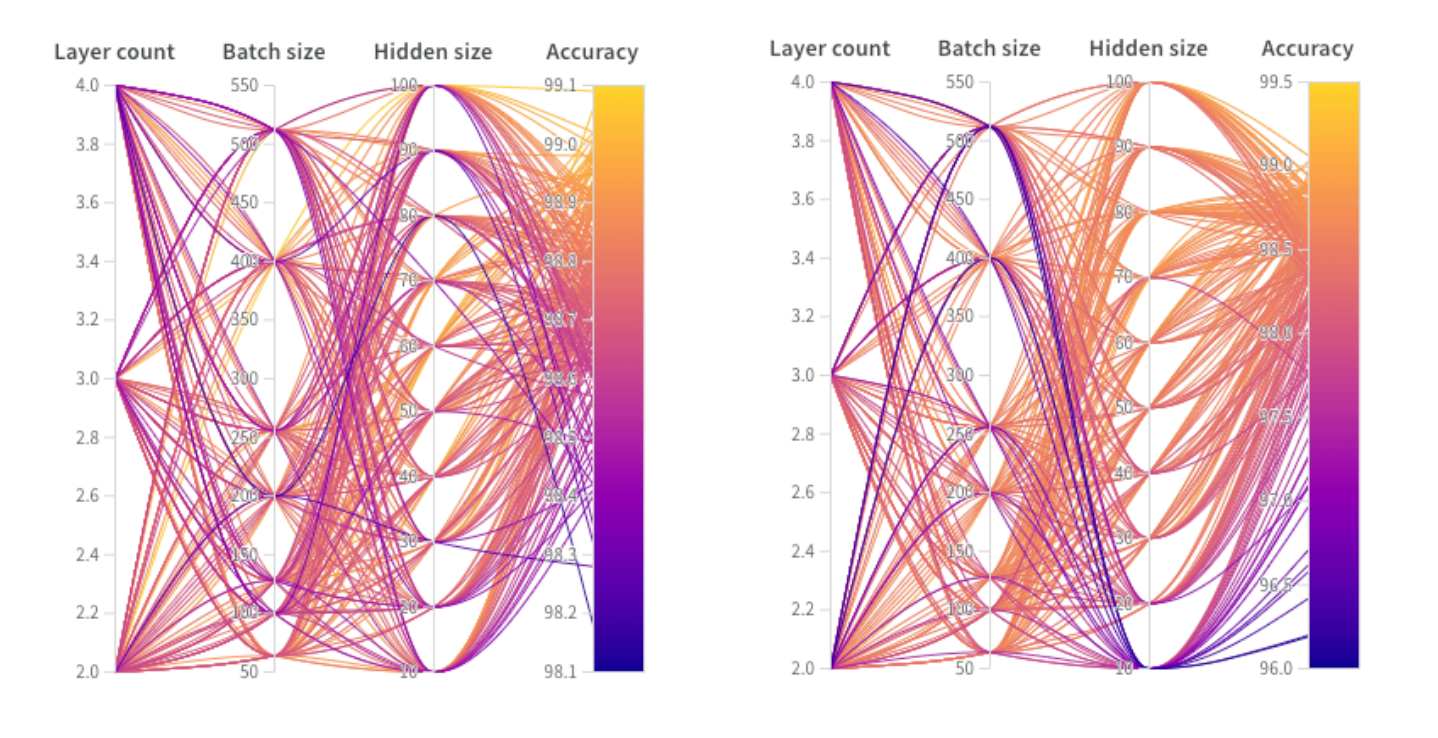

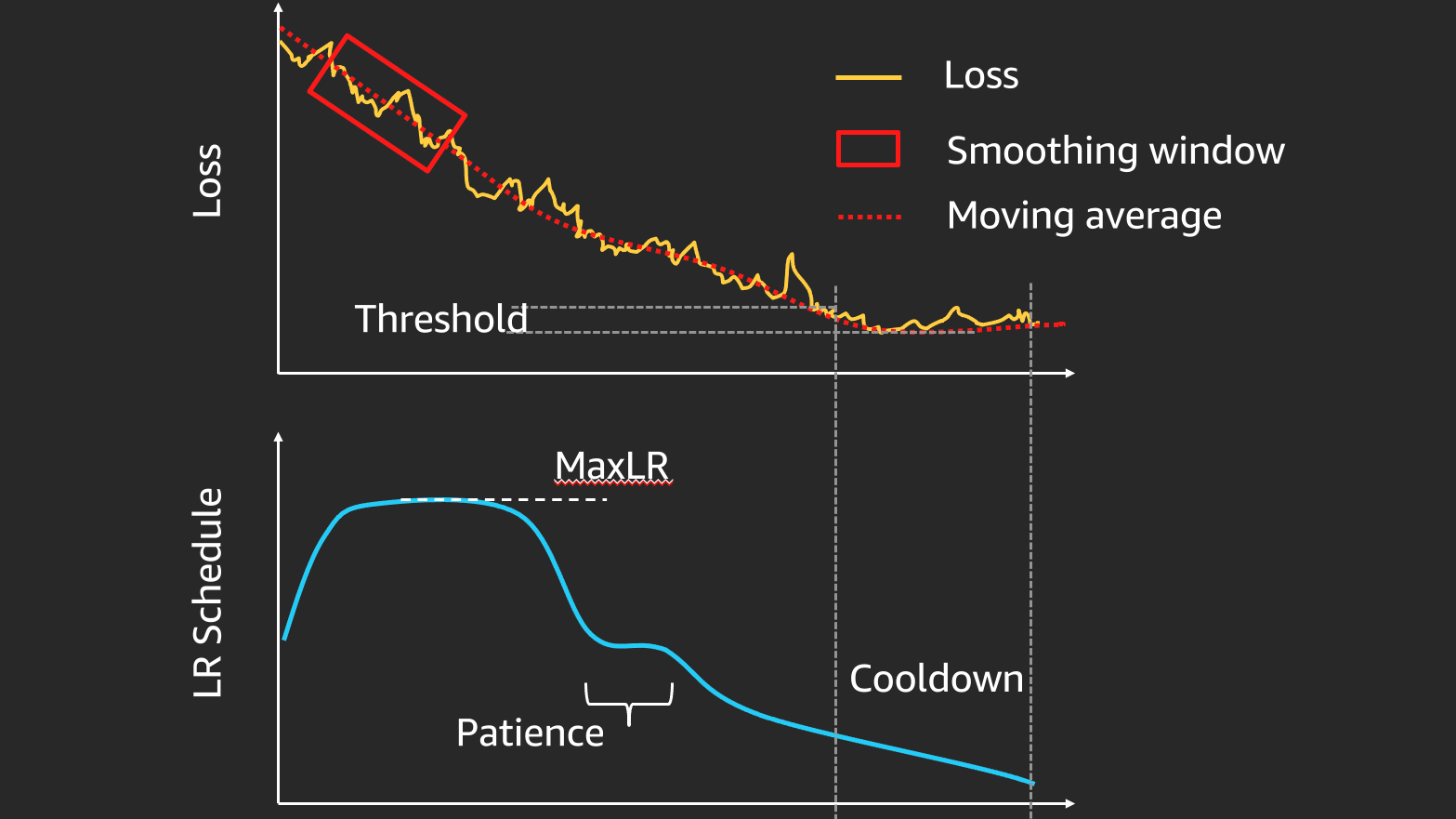

Hyperparameters That Make or Break Your Bot

Essential RL Hyperparams for Hyperliquid

- Learning Rate: 0.0003. Controls how far each gradient update moves the policy. This is Stable Baselines3's PPO default and a sensible starting point; set it too high and the policy can overshoot in volatile crypto futures.

- Gamma: 0.99. Discount factor balancing short- vs. long-term rewards. At 0.99 the agent effectively looks about 100 steps ahead, which keeps it patient amid BTC swings on Hyperliquid.

- Clip Range: 0.2. The PPO staple that caps how far the policy can shift per update, stabilizing training for reliable entries and exits in futures trading.

- Batch Size: 64. Number of transitions (environment steps, not trades) in each minibatch gradient update. Smaller batches update more often; larger ones give smoother gradients at more compute per update.

- Epochs: 10. Number of passes over each collected rollout buffer per update. Balances sample efficiency against overfitting a single rollout, which matters for quick adaptation to market shifts.

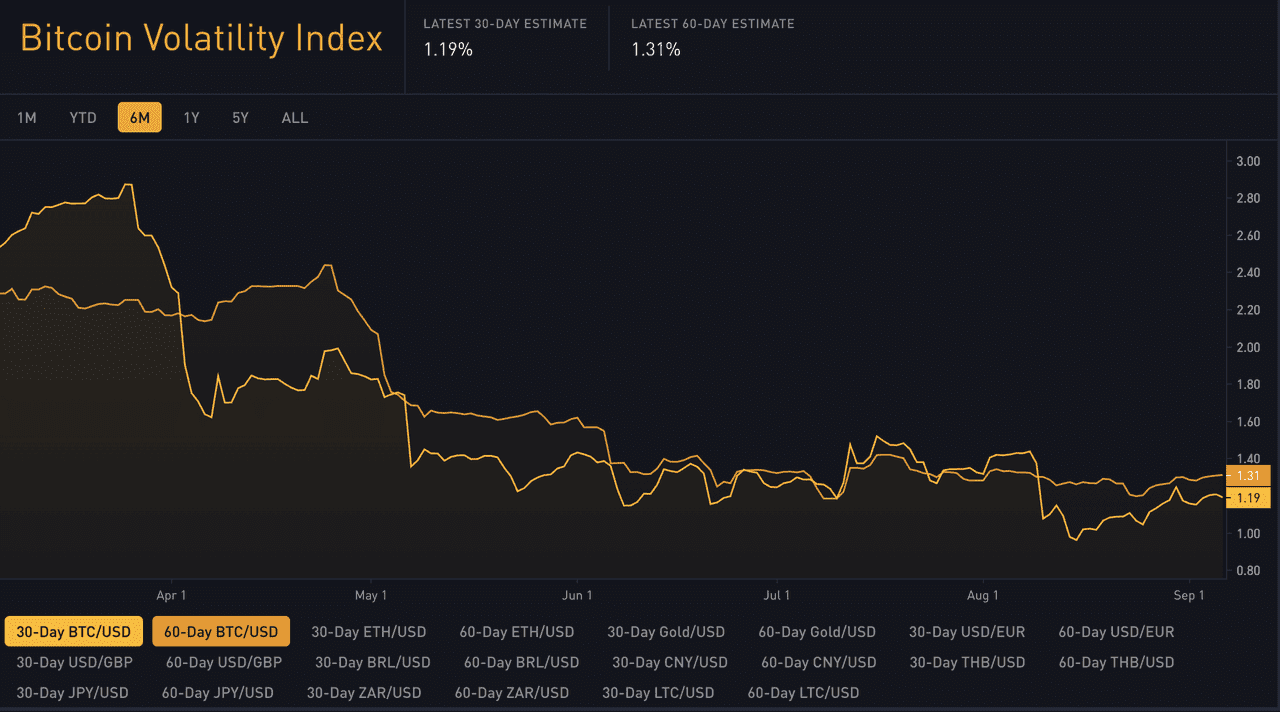

BTC Volatility Tip: At $70,526 (24h high $70,621, low $67,642), you might experiment with a clip range of up to 0.25 for choppy conditions, then confirm in backtests that it actually helps. Empower your bot to thrive!

Fine-tune these surgically. Too aggressive a learning rate, and your agent chases ghosts in BTC's $1,915.00 24h pump. Set gamma too low, and it ignores long-term rewards from funding plays. Ray Tune, which integrates with RLlib, sweeps hyperparameter combinations with little boilerplate.
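The listed values map directly onto Stable Baselines3's `PPO` keyword arguments. As a lightweight stand-in for a Ray Tune sweep (this is a plain `itertools` grid, not Tune's API), you can enumerate variations around them and train one model per combination; the grid values are arbitrary examples. `effective_horizon` applies the usual 1 / (1 - gamma) rule of thumb for how far ahead a discount factor meaningfully looks.

```python
import itertools

BASE_PPO_KWARGS = {
    "learning_rate": 3e-4,
    "gamma": 0.99,
    "clip_range": 0.2,
    "batch_size": 64,
    "n_epochs": 10,
}

# Example grid around the defaults (values chosen for illustration only)
GRID = {
    "learning_rate": [1e-4, 3e-4],
    "gamma": [0.99, 0.995],
    "clip_range": [0.2, 0.25],
}

def grid_configs(base: dict, grid: dict) -> list[dict]:
    """Every combination of grid values, layered over the base kwargs."""
    keys = list(grid)
    return [{**base, **dict(zip(keys, combo))} for combo in itertools.product(*grid.values())]

def effective_horizon(gamma: float) -> float:
    """Rough number of steps a discount factor 'sees' ahead: 1 / (1 - gamma)."""
    return 1.0 / (1.0 - gamma)
```

Each config can be passed as `PPO("MlpPolicy", env, **cfg)`. With gamma at 0.99 the horizon is about 100 steps, roughly four days of hourly bars; 0.995 doubles it to about 200.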

Watch those Robot Traders vids for the full Python walkthrough; they demystify multi-coin scaling, vital when Hyperliquid lists alts alongside BTC at $70,526.00. Adapt their scripts: swap in Hyperliquid endpoints for order books, and add liquidation and oracle-price data to the state.
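When swapping in order-book data, two cheap features are the spread and the top-of-book imbalance. The sketch assumes an L2 snapshot shaped like `{"levels": [bids, asks]}`, with each level a dict of string `px` and `sz` fields and the best price first; that is our reading of Hyperliquid's `l2Book` response and should be verified against the API docs. `book_features` is a hypothetical helper.

```python
def book_features(snapshot: dict, depth: int = 5) -> dict:
    """Mid price, spread in basis points, and size imbalance over the top `depth` levels."""
    bids, asks = snapshot["levels"]
    best_bid, best_ask = float(bids[0]["px"]), float(asks[0]["px"])
    mid = (best_bid + best_ask) / 2
    bid_sz = sum(float(level["sz"]) for level in bids[:depth])
    ask_sz = sum(float(level["sz"]) for level in asks[:depth])
    # +1 means all resting size is on the bid, -1 means all on the ask
    imbalance = (bid_sz - ask_sz) / (bid_sz + ask_sz)
    return {"mid": mid, "spread_bps": (best_ask - best_bid) / mid * 10_000,
            "imbalance": imbalance}
```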

Risks, Realities, and Live Deployment

No bot is bulletproof, especially in crypto's wilds. RL agents can overfit to bull runs like today's 2.79% BTC nudge and crumble on black swans. Hyperliquid's post-liquidation margin ramps? Bake them into your penalty function. Monitor for concept drift, and retrain weekly on fresh data from API streams.
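One simple way to tell when "weekly" isn't soon enough is to compare recent realized volatility with the volatility the agent was trained on. The ratio test and its 1.5x threshold are our illustrative choices; more formal drift tests exist, but this catches obvious regime changes.

```python
import numpy as np

def volatility_drift(train_returns, recent_returns, threshold: float = 1.5) -> bool:
    """True if recent volatility is more than `threshold` times higher or lower than in training."""
    train_vol = np.std(train_returns, ddof=1)
    recent_vol = np.std(recent_returns, ddof=1)
    ratio = recent_vol / train_vol
    return bool(ratio > threshold or ratio < 1 / threshold)
```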

Deployment checklist: a VPS near Hyperliquid nodes for sub-10ms latency, API keys vaulted, position limits hardcoded. Start small: 0.1% of the portfolio per trade. No-code builders like TradeLab.ai let you prototype ideas fast before porting them to full RL. The hyperliquid-ai-trading-bot repo on GitHub integrates LLMs for signal boosts, hybridizing RL with ChatGPT sentiment scans.
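Hardcoded limits are easiest to trust when they live in one small function that every order passes through. A minimal sketch, where the 0.1% per-trade figure comes from the checklist and `MAX_POSITION_USD` is a hypothetical example cap:

```python
MAX_TRADE_FRACTION = 0.001      # 0.1% of portfolio per trade, per the checklist
MAX_POSITION_USD = 5_000.0      # hypothetical hard cap on total exposure

def clamp_order_notional(requested_usd: float, portfolio_usd: float,
                         current_position_usd: float) -> float:
    """Shrink a signed order notional so neither the per-trade nor the position cap is exceeded."""
    per_trade_cap = portfolio_usd * MAX_TRADE_FRACTION
    notional = max(-per_trade_cap, min(requested_usd, per_trade_cap))
    # Keep the resulting position inside [-MAX_POSITION_USD, +MAX_POSITION_USD]
    new_position = current_position_usd + notional
    if new_position > MAX_POSITION_USD:
        notional -= new_position - MAX_POSITION_USD
    elif new_position < -MAX_POSITION_USD:
        notional -= new_position + MAX_POSITION_USD
    return notional
```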

Scale smart: run an ensemble of agents, one for momentum and another delta-neutral à la HL-Delta. When BTC tests the $70,621.00 high again, your fleet of autonomous Hyperliquid agents can compound edges others miss. Test on simulations that mirror today's range, then deploy with confidence.

Hyperliquid's throughput lets RL shine unthrottled. Pair it with self-custody, and you're trading like whales minus the suits. Dive into these repos, tweak that PPO code, and launch your RL trading agent on Hyperliquid. The market waits for no one, but your bot will be ready.