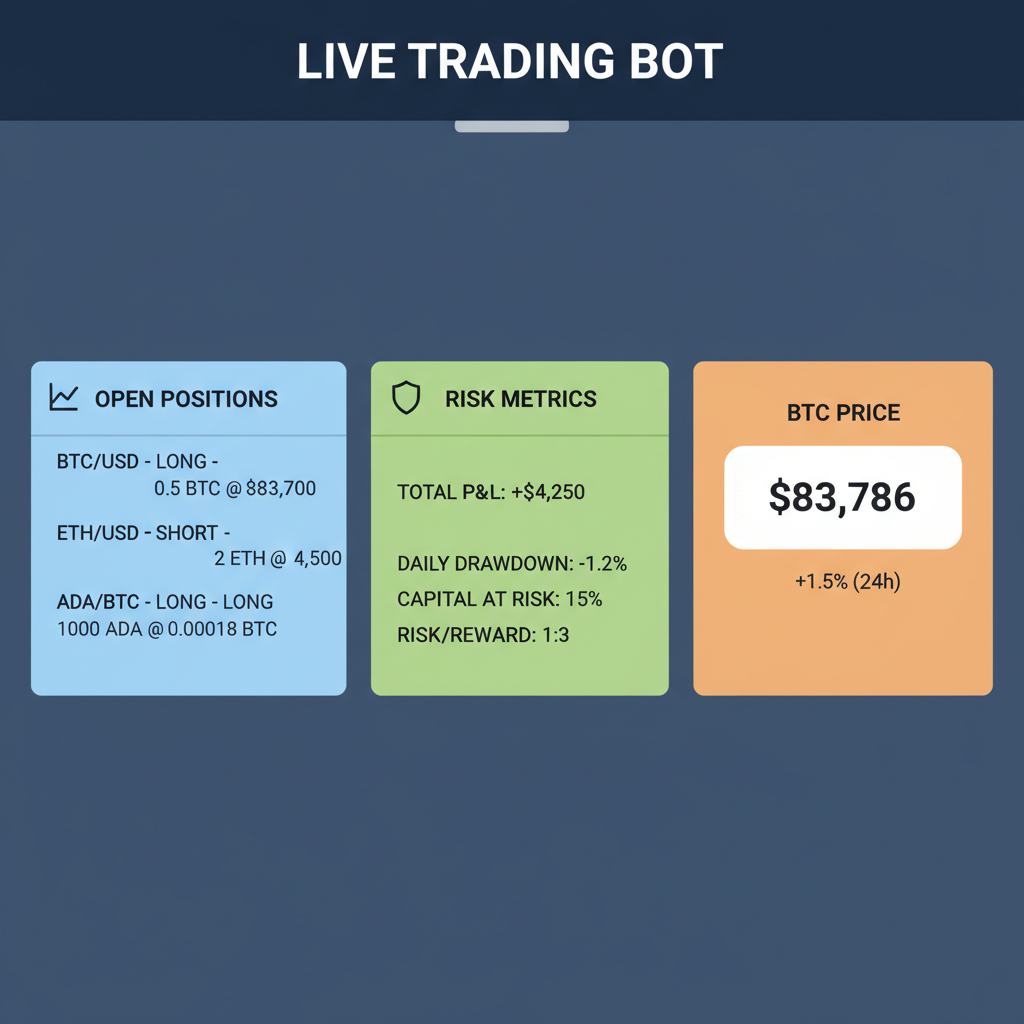

Bitcoin’s steady stance at $83,786.00 underscores a maturing crypto market, yet perpetual futures on Hyperliquid demand a precision few humans can sustain. The DEX’s volume exploded from $25.9 billion in June 2024 to $2.63 trillion by May 2025, fueling a boom in Hyperliquid trading bots. Among them, reinforcement learning (RL) architectures stand out, training agents to navigate volatility through trial, reward, and adaptation. These autonomous AI trading systems learn entry and exit points for perps from data, aiming to turn it into an edge without rigid rules.

Traditional bots falter in perps’ leverage-amplified swings, but reinforcement learning flips the script. Picture an agent exploring thousands of BTC-USD perp scenarios, rewarded for long positions when momentum builds above $83,786.00 and penalized for overexposure during dips toward the $81,169.00 low. Platforms like Gridy.ai and HyperAgent pioneer this, blending no-code interfaces with deep RL models for AI agents trading Hyperliquid perps.

Reinforcement Learning’s Edge in Perps Domination

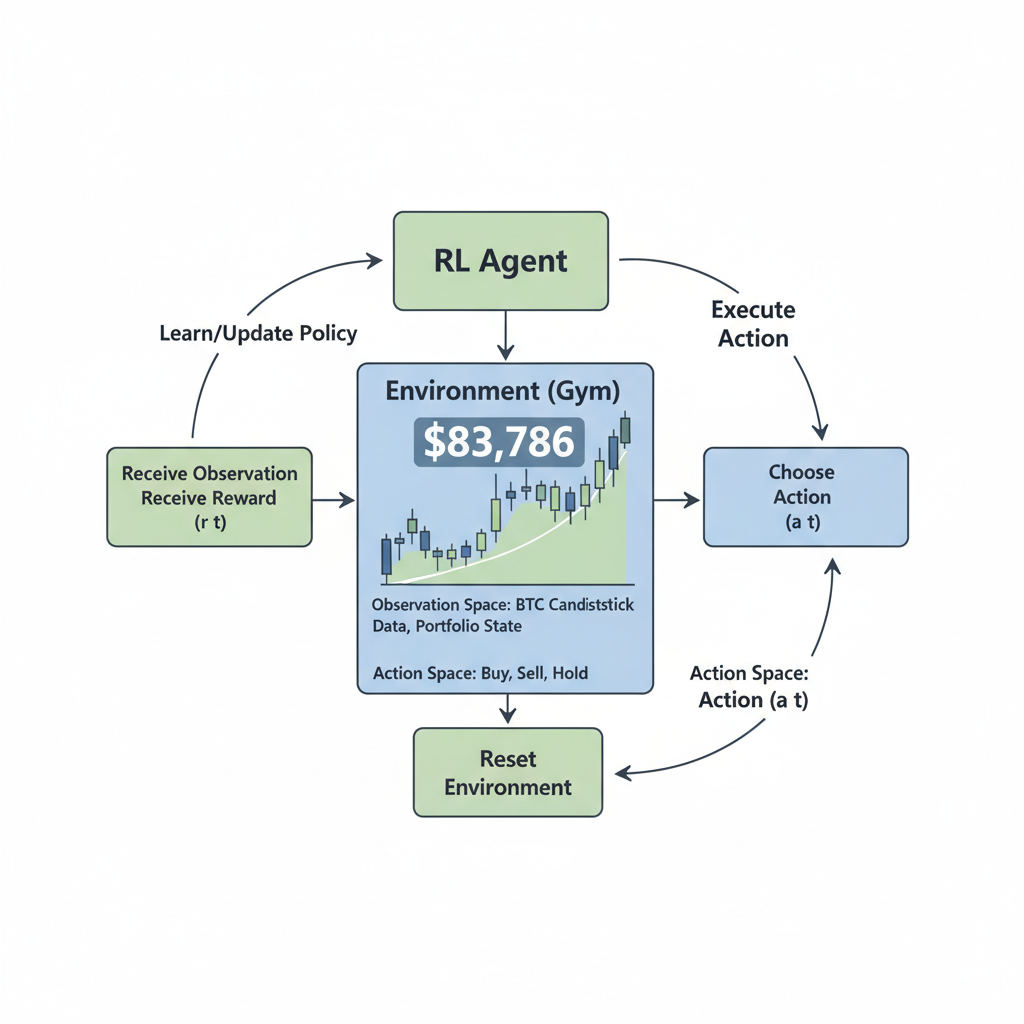

Why bet on RL for Hyperliquid? Simple: perps trading thrives on sequential decisions under uncertainty. Markov decision processes model this naturally, with states that reflect order book depth, funding rates, and BTC’s $83,786.00 anchor. An RL agent, trained with Q-learning or PPO, maximizes cumulative reward over episodes that mimic 24-hour cycles.
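To make the Q-learning idea concrete, here is a minimal tabular sketch. The state labels ("calm", "trending"), the action names, and the values of `alpha` and `gamma` are illustrative assumptions, not anything taken from Hyperliquid or the projects mentioned in this article.

```python
from collections import defaultdict

ACTIONS = ("hold", "long", "short")  # hypothetical action set


def q_update(q, state, action, reward, next_state, alpha=0.1, gamma=0.99):
    """Apply one tabular Q-learning update and return the new Q-value."""
    # Best value the agent could obtain from the next state
    best_next = max(q[(next_state, a)] for a in ACTIONS)
    # Temporal-difference target: immediate reward plus discounted future value
    td_target = reward + gamma * best_next
    q[(state, action)] += alpha * (td_target - q[(state, action)])
    return q[(state, action)]


q_table = defaultdict(float)  # unseen (state, action) pairs start at 0.0
new_value = q_update(q_table, "trending", "long", reward=1.0, next_state="calm")
print(new_value)
```

A real perps agent would face a continuous state space, which is why deep methods such as PPO replace the table with a neural network, but the update rule above is the core of the idea.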

Consider open-source sparks like SimSimButDifferent/HyperLiquidAlgoBot on GitHub. It optimizes automated execution for perps, a foundation ripe for RL upgrades. Freqtrade’s Python framework adds Telegram control and backtesting, letting you evaluate RL policies on historical data before touching Hyperliquid’s API. My strategic take: pair these with state-of-the-art ML from projects like Intelligent Trading Bot, which generates signals autonomously. Diversify to thrive – start with RL hyperparameters tuned for low-latency on-chain execution.

Hyperliquid’s surge demands bots that evolve, not just execute. RL agents adapt to regime shifts, like BTC’s tight 24h range from $81,169.00 to $84,587.00.

Critically, RL can mitigate liquidation risks in leveraged perps. Agents can learn conservative position sizing, dynamically adjusting exposure as volatility changes. In my 11 years blending assets, I’ve seen rigid strategies crumble; RL’s exploration-exploitation balance builds resilience.
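As a rough illustration of volatility-aware sizing, the sketch below shrinks the notional position as recent returns get noisier. It is a hand-written rule, not a learned policy, and the function name, the `risk_fraction` of 1%, and the 3x leverage cap are all assumptions chosen for the example.

```python
import statistics


def position_size(equity, prices, risk_fraction=0.01, max_leverage=3.0):
    """Return a notional position size that shrinks as recent volatility rises."""
    # Simple per-period returns from the price series
    returns = [(b - a) / a for a, b in zip(prices, prices[1:])]
    vol = statistics.pstdev(returns)
    cap = equity * max_leverage
    if vol == 0:
        return cap
    # Target a fixed fraction of equity at risk per unit of volatility
    notional = equity * risk_fraction / vol
    return min(notional, cap)


calm = position_size(10_000, [100.0, 100.1, 100.0, 100.1, 100.0])
choppy = position_size(10_000, [100.0, 105.0, 98.0, 104.0, 97.0])
print(calm, choppy)
```

An RL agent would ideally discover a similar relationship on its own; a rule like this is also useful as a hard ceiling on whatever size the agent proposes.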

Hyperliquid’s Bot Landscape: RL-Ready Tools Explode

Hyperliquid’s ecosystem teems with DeFi reinforcement learning bots and automation allies. Gridy.ai deploys grid strategies effortlessly, ideal for RL fine-tuning on range-bound BTC at $83,786.00. Perpsdotbot parses natural language into trades via Telegram, a gateway for language-driven RL agents.

Hyper DeFAI offers real-time insights and customizable bots, optimizing risk for perps. Coinrule skips coding for rule-based automation, but savvy users layer RL atop. Mizar integrates DCA and copy-trading, while WunderTrading and GoodcryptoX handle futures grids and trailing stops – all Hyperliquid-compatible.

- Gunbot: On-chain perps support with strategy automation.

- 3Commas: 24/7 rule execution, primed for RL signals.

- HyperAgent: Institutional-grade with deterministic guards, echoing RL’s precision.

TradeLab.ai’s no-code builder imports strategies, perfect for prototyping RL policies without the Python 3.8+ setup hurdles outlined in JustSteven’s Medium guide. Chainstack’s grid tutorials accelerate DeFi setups. This arsenal positions Hyperliquid as a hub for crypto perp trading automation.

Bitcoin (BTC) Price Prediction 2027-2032

Forecasts influenced by Hyperliquid perps momentum, AI trading bots surge, and current price of $83,786 with short-term targets of $85,000-$88,000 next week and $90,000 next month

| Year | Minimum Price (USD) | Average Price (USD) | Maximum Price (USD) |
|---|---|---|---|
| 2027 | $95,000 | $120,000 | $160,000 |
| 2028 | $130,000 | $200,000 | $300,000 |
| 2029 | $180,000 | $250,000 | $350,000 |
| 2030 | $220,000 | $320,000 | $450,000 |
| 2031 | $280,000 | $420,000 | $600,000 |
| 2032 | $350,000 | $550,000 | $800,000 |

Price Prediction Summary

Bitcoin’s price is expected to experience robust growth from 2027 to 2032, propelled by the explosive rise in Hyperliquid’s perpetual futures volume (from $25.9B to $2.63T), the proliferation of AI-powered trading bots (e.g., Gridy.ai, Perpsdotbot, Hyper DeFAI), the 2028 halving, and broader DeFi adoption. The average-price column implies a CAGR of roughly 35% from 2027 to 2032, with each year ranging from a conservative bearish minimum to an optimistic bullish maximum amid market cycles.
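The CAGR quoted for the average-price column can be checked directly from the table’s 2027 and 2032 averages. The helper name `cagr` is simply a label chosen for this example.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values over a number of years."""
    return (end_value / start_value) ** (1 / years) - 1


avg_2027, avg_2032 = 120_000, 550_000  # average prices from the table
growth = cagr(avg_2027, avg_2032, years=2032 - 2027)
print(f"{growth:.1%}")
```

The result lands in the mid-30s percent range, consistent with the summary.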

Key Factors Affecting Bitcoin Price

- Hyperliquid DEX volume surge and AI bot ecosystem growth enhancing liquidity and perps trading

- Bitcoin halving in 2028 increasing scarcity and historical bull cycles

- Institutional adoption via ETFs and regulatory progress

- Technological advancements in autonomous trading agents and DeFi automation

- Macroeconomic trends, competition from altcoins, and global adoption rates influencing volatility

Disclaimer: Cryptocurrency price predictions are speculative and based on current market analysis. Actual prices may vary significantly due to market volatility, regulatory changes, and other factors. Always do your own research before making investment decisions.

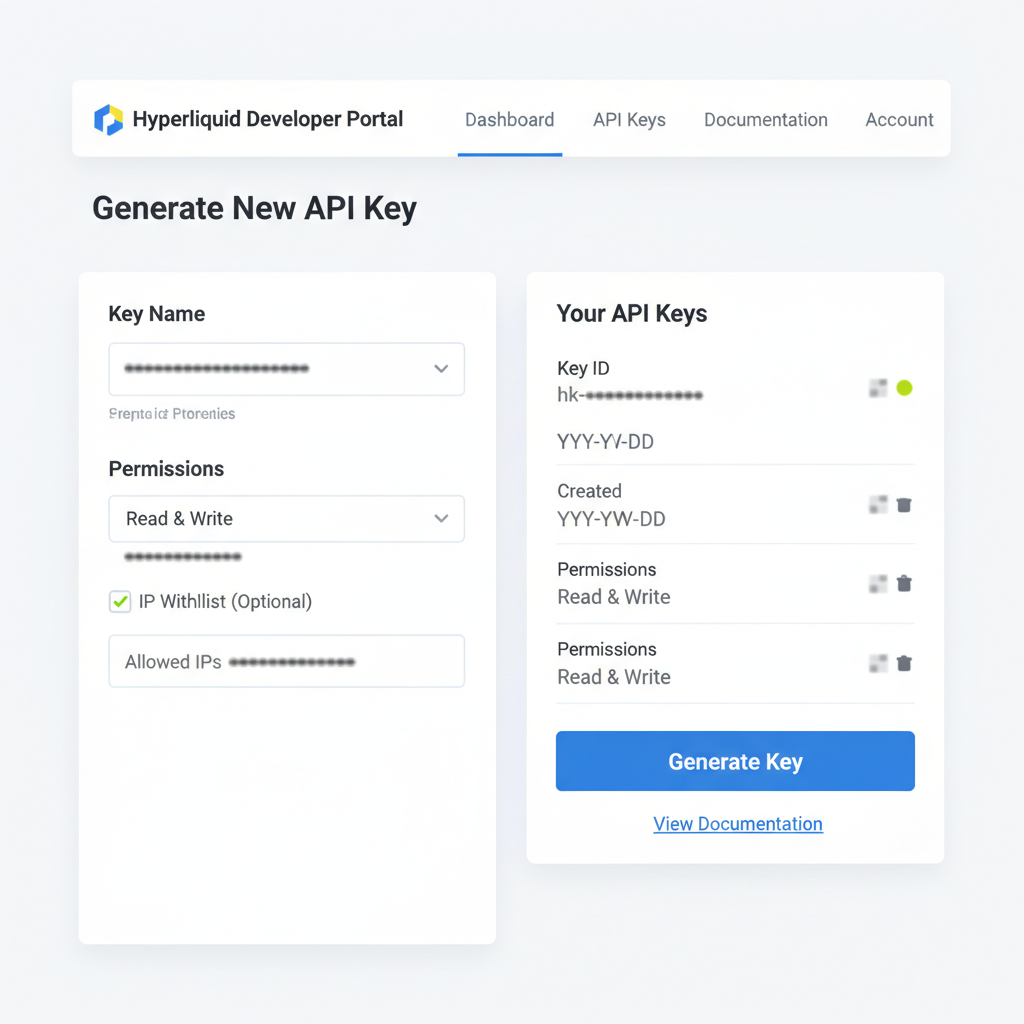

Blueprint for Your RL Agent on Hyperliquid

Building starts with API mastery. Hyperliquid’s endpoints feed order books and positions into your RL environment. Use Python libraries from WunderTrading guides: install ccxt or hyperliquid-py, authenticate with wallet keys. Define the state space – BTC price at $83,786.00, leverage levels, unrealized PnL.
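One way to picture that state space is a small container that normalizes raw account values before they reach the policy. The class name `PerpState`, its fields, and the normalization choices are assumptions for illustration; the numbers are typed in by hand rather than fetched from any API.

```python
from dataclasses import dataclass


@dataclass
class PerpState:
    """Hypothetical snapshot of the features described above."""
    mark_price: float      # current BTC price
    leverage: float        # current account leverage
    unrealized_pnl: float  # open-position PnL in USD
    equity: float          # account equity in USD

    def to_observation(self, ref_price):
        """Scale raw values so the policy sees numbers of similar magnitude."""
        return [
            self.mark_price / ref_price - 1.0,  # relative move from a reference price
            self.leverage,
            self.unrealized_pnl / self.equity,  # PnL as a fraction of equity
        ]


state = PerpState(mark_price=83786.00, leverage=2.0, unrealized_pnl=150.0, equity=10_000.0)
obs = state.to_observation(ref_price=83786.00)
print(obs)
```

Normalizing like this tends to matter in practice: feeding a raw price of 83,786 next to a leverage of 2 gives a neural network inputs on wildly different scales.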

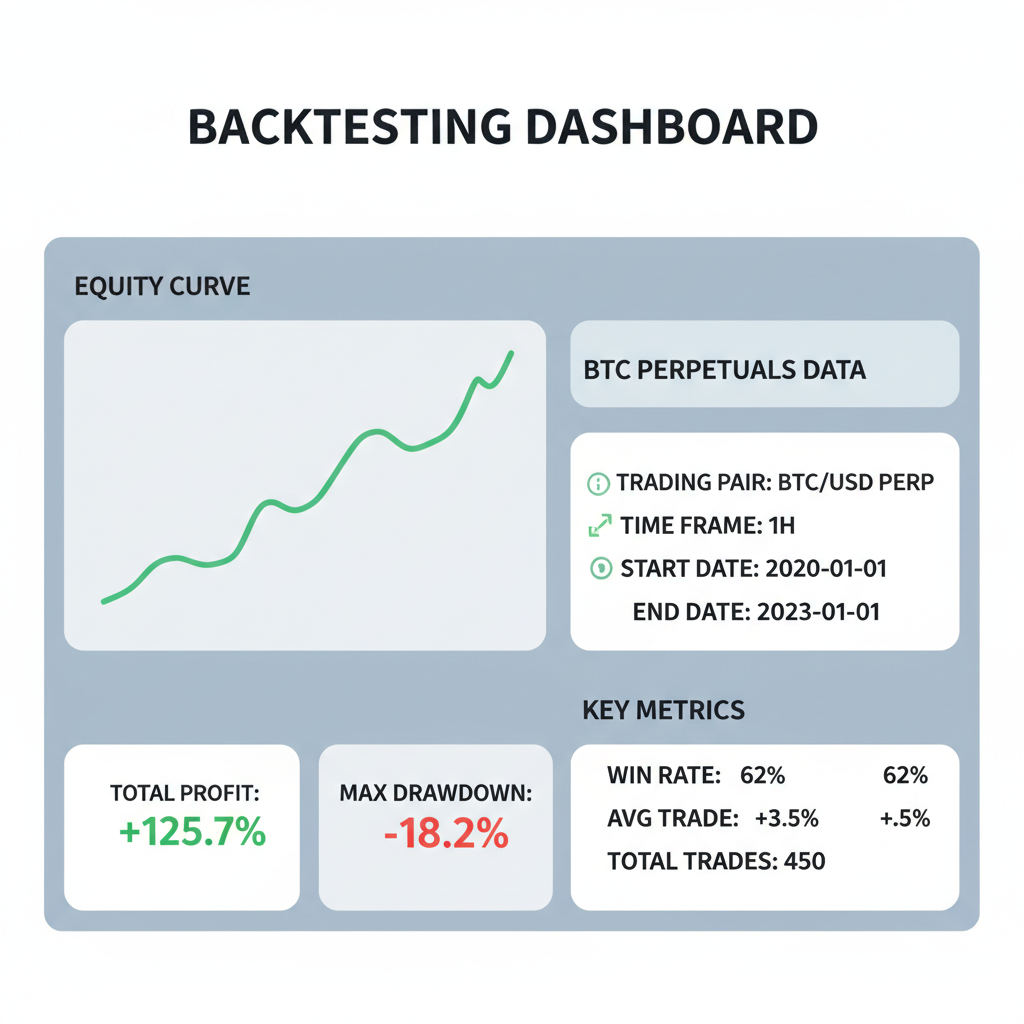

Action space? Long/short orders, with sizes scaled to account equity. The reward function penalizes drawdowns and rewards profitable closes. Train offline on historical perps data and validate with Freqtrade’s backtester before deploying live. Opinion: prioritize off-policy RL like SAC for sample efficiency in sparse-reward perps.
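A reward of that shape might look like the sketch below. The weights `drawdown_weight` and `close_bonus` are arbitrary assumptions you would tune, and the function is a starting point rather than a recommended design.

```python
def step_reward(realized_pnl, equity, peak_equity, drawdown_weight=2.0, close_bonus=0.1):
    """Reward realized profit, penalize drawdown from the equity peak."""
    # Fractional drop from the highest equity seen so far (0.0 when at the peak)
    drawdown = max(0.0, (peak_equity - equity) / peak_equity)
    reward = realized_pnl / peak_equity - drawdown_weight * drawdown
    if realized_pnl > 0:
        reward += close_bonus  # extra credit for closing a trade in profit
    return reward


winning_close = step_reward(realized_pnl=100.0, equity=10_100.0, peak_equity=10_100.0)
losing_close = step_reward(realized_pnl=-200.0, equity=9_800.0, peak_equity=10_000.0)
print(winning_close, losing_close)
```

Weighting drawdown more heavily than profit nudges the agent toward the conservative behavior that leveraged perps require.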

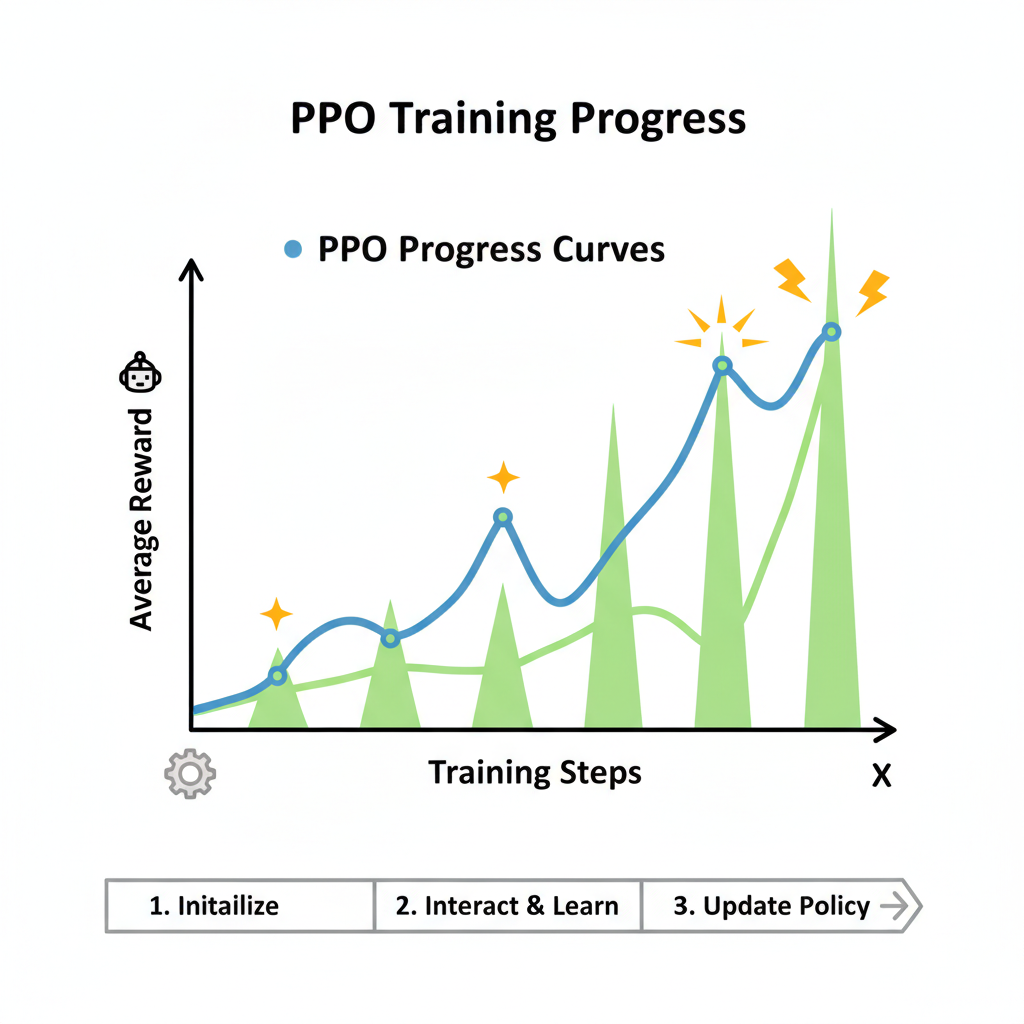

Let’s sketch a minimal viable RL loop. Observe BTC hovering at $83,786.00 and feed it into your Gym environment alongside perp funding rates. Proximal Policy Optimization (PPO) shines here, balancing exploration and exploitation in Hyperliquid’s high-throughput order flow.

Basic RL Trading Environment and PPO Agent Setup

Let’s build a foundational reinforcement learning trading agent for Hyperliquid BTC perpetuals. This custom Gym environment defines the state with the current BTC price (initially $83,786.00), account balance, and position size. It simulates price movements and discrete actions (hold, buy, sell), rewarding profitable positions while penalizing excessive trading.

```python
import gymnasium as gym
import numpy as np
from gymnasium import spaces
from stable_baselines3 import PPO


class HyperliquidTradingEnv(gym.Env):
    """
    Custom Gymnasium environment simulating Hyperliquid BTC perps trading.

    State: [current_price, balance, position]
    Actions: 0 - hold, 1 - buy one lot, 2 - sell one lot
    Simplified: long-only, no leverage, fees, slippage, or funding.
    """

    START_PRICE = 83786.00
    START_BALANCE = 10000.0
    LOT_SIZE = 0.01  # BTC per trade; a 1 BTC lot would exceed the starting balance
    TRADE_PENALTY = 0.001  # discourages excessive trading

    def __init__(self):
        super().__init__()
        self.action_space = spaces.Discrete(3)
        self.observation_space = spaces.Box(low=0, high=np.inf, shape=(3,), dtype=np.float32)
        self.max_steps = 1000
        self.current_price = self.START_PRICE
        self.balance = self.START_BALANCE
        self.position = 0.0
        self.current_step = 0

    def _get_obs(self):
        return np.array([self.current_price, self.balance, self.position], dtype=np.float32)

    def _equity(self):
        # Mark-to-market account value: cash plus open position
        return self.balance + self.position * self.current_price

    def reset(self, seed=None, options=None):
        super().reset(seed=seed)
        self.current_price = self.START_PRICE
        self.balance = self.START_BALANCE
        self.position = 0.0
        self.current_step = 0
        return self._get_obs(), {}

    def step(self, action):
        action = int(action)
        self.current_step += 1
        equity_before = self._equity()

        # Simulate a price move (1% per-step volatility) with the seeded RNG
        price_change = self.np_random.normal(0, 0.01 * self.current_price)
        self.current_price = max(self.current_price + price_change, 1000.0)

        # Execute the trade at the new price
        traded = False
        cost = self.LOT_SIZE * self.current_price
        if action == 1 and self.balance >= cost:  # Buy
            self.balance -= cost
            self.position = round(self.position + self.LOT_SIZE, 8)
            traded = True
        elif action == 2 and self.position >= self.LOT_SIZE:  # Sell
            self.balance += cost
            self.position = round(self.position - self.LOT_SIZE, 8)
            traded = True

        # Reward: change in equity, normalized by starting balance, minus a per-trade penalty
        reward = (self._equity() - equity_before) / self.START_BALANCE
        if traded:
            reward -= self.TRADE_PENALTY

        terminated = bool(self._equity() < 100)  # account effectively wiped out
        truncated = self.current_step >= self.max_steps  # time limit reached
        return self._get_obs(), float(reward), terminated, truncated, {}


# Train the agent
env = HyperliquidTradingEnv()
model = PPO("MlpPolicy", env, verbose=1, n_steps=2048)
model.learn(total_timesteps=10000)

# Test the trained agent
obs, _ = env.reset()
for step in range(20):
    action, _ = model.predict(obs)
    obs, reward, terminated, truncated, _ = env.step(action)
    print(f"Step {step + 1}: Price ${env.current_price:.2f}, Balance: ${env.balance:.2f}, "
          f"Position: {env.position}, Reward: {reward:.4f}")
    if terminated or truncated:
        obs, _ = env.reset()
```

This setup gives your agent a sandbox in which to learn a trading policy autonomously. Scale strategically by integrating Hyperliquid’s real-time API for live data, tuning hyperparameters, and adding risk controls like stop-losses before moving from simulation to live deployment.

Hyperliquid’s low-latency API demands efficient inference; keep actor-critic networks small enough to decide in under 10 ms. Backtest against that tight 24h range – $81,169.00 lows to $84,587.00 peaks – rewarding agents that sidestep false breakouts. My cross-market lens: fuse RL with ensemble methods, layering momentum indicators for crisper signals in perps’ noise.
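Checking a policy against a 10 ms budget takes only a few lines of timing code. In the sketch below, `dummy_policy` is a stand-in for a trained network’s forward pass, and both function names are invented for the example.

```python
import statistics
import time


def median_latency_ms(policy, observation, runs=200):
    """Time repeated policy calls and return the median latency in milliseconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        policy(observation)
        samples.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(samples)


def dummy_policy(obs):
    # Stand-in for a real model: pick the index of the largest input
    return max(range(len(obs)), key=lambda i: obs[i])


latency = median_latency_ms(dummy_policy, [0.1, 0.7, 0.2])
print(f"median latency: {latency:.4f} ms, within 10 ms budget: {latency < 10.0}")
```

Measure the median rather than the mean, since occasional garbage-collection or scheduling pauses can distort an average; for live trading you would also want to watch the tail (for example the 99th percentile).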

Step-by-Step: Deploying Your Reinforcement Learning Crypto Bot

Once trained, look for Sharpe ratios above 2.0 on validation sets before going live. Take a cue from HyperAgent’s telemetry: log episode rewards and visualize policy entropy. Scale to multi-asset perps – BTC, ETH – diversifying beyond single-pair exposure. In uncertain times, this autonomy frees you for macro plays.
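For reference, a Sharpe ratio can be computed from a list of per-period returns as sketched here. The choice of 365 periods per year (crypto trades every day) and a zero risk-free rate are assumptions, and the sample returns are made up.

```python
import math
import statistics


def annualized_sharpe(returns, periods_per_year=365, risk_free_per_period=0.0):
    """Annualized Sharpe ratio from per-period returns."""
    excess = [r - risk_free_per_period for r in returns]
    stdev = statistics.stdev(excess)
    if stdev == 0:
        return float("nan")  # undefined when returns never vary
    return statistics.mean(excess) / stdev * math.sqrt(periods_per_year)


daily_returns = [0.010, -0.005, 0.007, 0.002, -0.003, 0.004]  # illustrative values
sharpe = annualized_sharpe(daily_returns)
print(f"{sharpe:.2f}")
```

A handful of returns like this says little; compute the ratio over a validation window long enough to include both trending and range-bound regimes.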

Yet RL isn’t plug-and-play. Overfitting plagues naive implementations; combat it with domain randomization, simulating varied slippage around the $83,786.00 price level. Partial observability from order books? Recurrent nets like LSTM policies ingest sequences to anticipate funding flips. Pair with Freqtrade for hyperparameter sweeps – grid search learning rates from 1e-4 to 1e-3.
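Domain randomization for slippage can be as simple as drawing a random execution penalty for each simulated fill. The function name and the 5 basis point ceiling below are assumptions for illustration, not measured Hyperliquid figures.

```python
import random


def randomized_fill_price(mid_price, side, rng, max_slippage_bps=5.0):
    """Return a fill price worsened by a randomly drawn slippage."""
    slippage = rng.uniform(0.0, max_slippage_bps) / 10_000  # basis points to fraction
    if side == "buy":
        return mid_price * (1 + slippage)  # buys fill higher
    return mid_price * (1 - slippage)      # sells fill lower


rng = random.Random(42)  # seeded so training runs are reproducible
buy_fill = randomized_fill_price(83786.00, "buy", rng)
sell_fill = randomized_fill_price(83786.00, "sell", rng)
print(buy_fill, sell_fill)
```

Because the agent never sees the same execution cost twice, it has less opportunity to overfit to one idealized fill model.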

RL agents don’t just trade; they evolve with Hyperliquid’s meta, turning $2.63 trillion volumes into personal alpha.

GitHub gems accelerate the build: HyperLiquidAlgoBot’s strategy executor offers a place to slot in RL policies. TradeLab.ai’s logic builder prototypes reward shapes sans code, bridging to full ML. Chainstack’s tutorials lay out initial grid positions, and RL refines the entries. For DeFi purists, on-chain RL via hyperliquid-py keeps custody intact, dodging CEX pitfalls.

Risk management anchors everything. Enforce VaR limits at 5% daily, circuit breakers on 10% drawdowns. In my asset management days, unchecked leverage wrecked portfolios; RL’s learned conservatism – dynamic stop-losses tied to volatility – rebuilds that discipline. Current BTC stability at $83,786.00 with a mere -0.002440% dip rewards patient agents, punishing FOMO longs.
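Those two limits can sit outside the agent as a hard guard that overrides whatever the policy wants to do. The class below is a sketch under assumptions: `RiskGuard` and its method names are invented here, and the daily VaR estimate is passed in rather than computed.

```python
class RiskGuard:
    """Hypothetical guard enforcing a daily VaR limit and a drawdown circuit breaker."""

    def __init__(self, start_equity, var_limit=0.05, max_drawdown=0.10):
        self.peak_equity = start_equity
        self.var_limit = var_limit        # max daily VaR as a fraction of equity
        self.max_drawdown = max_drawdown  # drawdown that halts trading
        self.halted = False

    def update(self, equity):
        """Track peak equity and trip the circuit breaker on a deep drawdown."""
        self.peak_equity = max(self.peak_equity, equity)
        drawdown = 1.0 - equity / self.peak_equity
        if drawdown >= self.max_drawdown:
            self.halted = True
        return self.halted

    def allows(self, daily_var, equity):
        """Permit new risk only if not halted and the VaR estimate is within the limit."""
        return not self.halted and daily_var <= self.var_limit * equity


guard = RiskGuard(start_equity=10_000.0)
print(guard.allows(daily_var=400.0, equity=10_000.0))  # within the 5% limit
print(guard.update(8_900.0))                            # 11% drawdown trips the breaker
```

Once tripped, the guard stays halted until a human resets it, which is the point: a circuit breaker that the agent can talk itself out of is not a circuit breaker.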

Future-Proofing Your Perps Edge

Hyperliquid’s bot vanguard – from GoodcryptoX’s trailing futures to 3Commas’ vigilant rules – sets the stage for hybrid RL. Imagine Perpsdotbot’s NLP frontend querying ‘optimize longs above $83,786.00’, spawning adaptive agents. Mizar’s copy-trading evolves into policy distillation, cloning top RL performers.

Strategic pivot: allocate 20% of your portfolio to RL perps, blending with spot HODL. Test on paper trades first, scaling as win rates hit 60%+. Women in finance like me champion this: tech democratizes edges once gated by quants. Hyperliquid’s perp dominance, fueled by automation, invites you to architect resilience.

Embrace these AI agents for Hyperliquid perps, and watch rigid trades yield to fluid mastery. Your portfolio, diversified and dynamic, thrives amid BTC’s poised $83,786.00 vigil.